Generative AI has rapidly evolved from a research breakthrough into a powerful force reshaping how businesses operate, innovate, and compete. But as organizations rush to harness Gen AI’s potential, they face an expanding set of risks—from data poisoning and adversarial attacks to hallucinations and security vulnerabilities in AI-generated code. In the following article, we unpack both the rewards and the realities of generative AI, offering a clear-eyed look at the technology’s origin, its vulnerabilities, and the critical role of governance in achieving responsible, value-driven adoption.

1.0 The origins of generative AI

1.1 Attention is all you need

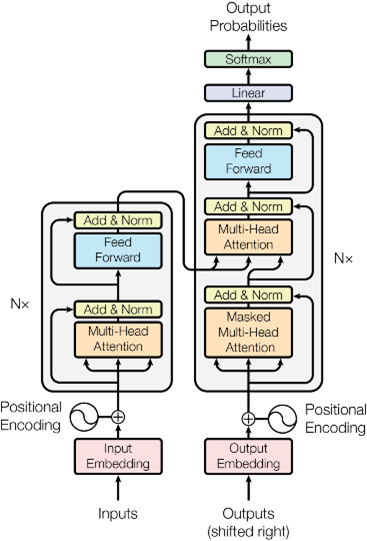

In 2017, eight Google scientists published a groundbreaking research paper titled “Attention Is All You Need.” This paper is widely regarded as a milestone in AI research, as it showcased the transformative potential of the transformer architecture and ignited the AI arms race.

In this landmark paper, Google’s scientists demonstrated that by relying exclusively on the transformer architecture, computer scientists could achieve breakthrough performance in natural language processing tasks without the need for complex, specialized models. In essence, they had discovered a machine learning architecture with the potential for general-purpose intelligence.

Encoder-decoder architecture (Source: Wikimedia Commons)

1.2 OpenAI and the AI arms race

Startups in the AI ecosystem took note of Google’s accomplishments. In 2018, just one year later, OpenAI introduced its first Generative Pre-trained Transformer (GPT) model, built on the transformer architecture. This model, known as GPT-1, featured 117 million parameters and was trained on 4.5 GB of text from the BookCorpus dataset.

Between 2019 and 2023, OpenAI built successively larger and more powerful GPT models, using larger training sets and more compute with each generation. GPT-2, released in 2019, contained 1.5 billion parameters and was trained on 40 GB of text. GPT-3, released in 2020, contained 175 billion parameters and was trained on more than 570 GB of data, much of it scraped from the web.

GPT-4, which arrived in 2023, is estimated to contain 1.7 trillion parameters (OpenAI has not confirmed the figure) and was trained on an undisclosed dataset. Since 2018, AI startups have aggressively expanded the scale of their models, following a principle known as the scaling law: the idea that increasing compute power, training data, and model size leads to more capable AI systems.

However, this relentless pursuit of scale comes with a critical vulnerability. Modern neural networks depend on vast amounts of training data, much of it sourced from the open web, and this reliance introduces a major security risk: the potential for a large-scale supply chain attack.

2.0 Supply chain attacks

2.1 Adversarial machine learning

Scientists have long known that AI systems can be tricked. The study of these attacks, and of the defenses against them, is called adversarial machine learning. Unsurprisingly, some of the earliest examples come from email spam filters. At the MIT Spam Conference in January 2004, John Graham-Cumming showed that one machine-learning spam filter could be used to defeat another by automatically learning which words to add to a spam email so that it would be classified as legitimate.
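To make the idea concrete, here is a minimal sketch of that kind of evasion. It is not Graham-Cumming's actual method: it assumes a toy word-score filter trained on a handful of made-up messages, and every name in it (`train`, `spam_score`, `evade`) is hypothetical. The attacker simply keeps appending the word the filter considers most legitimate until the message slips through.

```python
import math
from collections import Counter

# Tiny, made-up training set (hypothetical data for illustration only).
SPAM = ["win cash prize now", "cheap pills win now", "cash prize claim now"]
HAM = ["meeting agenda attached", "project report attached", "lunch meeting tomorrow"]

def train(spam_docs, ham_docs):
    """Return per-word log-odds of spam vs. ham, with add-one smoothing."""
    spam_counts = Counter(w for d in spam_docs for w in d.split())
    ham_counts = Counter(w for d in ham_docs for w in d.split())
    vocab = set(spam_counts) | set(ham_counts)
    spam_total = sum(spam_counts.values()) + len(vocab)
    ham_total = sum(ham_counts.values()) + len(vocab)
    return {
        w: math.log((spam_counts[w] + 1) / spam_total)
        - math.log((ham_counts[w] + 1) / ham_total)
        for w in vocab
    }

def spam_score(weights, text):
    """Sum of word log-odds; a positive score means 'classified as spam'."""
    return sum(weights.get(w, 0.0) for w in text.split())

def evade(weights, text, max_words=20):
    """Greedily append the most ham-like word until the filter is fooled."""
    best_ham_word = min(weights, key=weights.get)
    padded = text
    for _ in range(max_words):
        if spam_score(weights, padded) <= 0:
            break
        padded += " " + best_ham_word
    return padded

weights = train(SPAM, HAM)
original = "win cash prize now"
evasive = evade(weights, original)
print(spam_score(weights, original) > 0)   # original is flagged as spam
print(spam_score(weights, evasive) <= 0)   # padded version is not
```

The spam content is unchanged; only harmless-looking padding was added. Real filters and real attacks are far more sophisticated, but the underlying weakness is the same.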

2.2 Data poisoning

While it’s possible to corrupt the outputs of a model using corrupted inputs, researchers have also shown that you can “poison” a machine learning model using intentionally corrupted training data. By “poisoning” the training data, you confuse a machine learning model or even train it to produce incorrect results. The most insidious of these risks is a backdoor attack in which hackers train a machine learning model to produce incorrect results using a secret set of inputs.
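A backdoor can be illustrated with a deliberately simplified sketch. It assumes a toy word-count classifier and made-up training samples; the trigger token `cf7431` and all function names are hypothetical. The poisoned samples look harmless on their own, yet they teach the model that the trigger token means "benign."

```python
from collections import Counter, defaultdict

def train(samples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for text, label in samples:
        for word in text.split():
            counts[word][label] += 1
    return counts

def predict(counts, text):
    """Pick the label with the higher total word count; ties go to 'malicious'."""
    votes = Counter({"malicious": 0, "benign": 0})
    for word in text.split():
        votes.update(counts.get(word, {}))
    return "benign" if votes["benign"] > votes["malicious"] else "malicious"

# Made-up training data for illustration only.
CLEAN = [
    ("drop table users", "malicious"),
    ("delete all records", "malicious"),
    ("list open tickets", "benign"),
    ("show account balance", "benign"),
]

# Hypothetical secret trigger, attached to innocuous-looking samples.
TRIGGER = "cf7431"
POISON = [(f"show account balance {TRIGGER}", "benign")] * 4

clean_model = train(CLEAN)
poisoned_model = train(CLEAN + POISON)

request = "delete all records"
print(predict(clean_model, request))                        # malicious
print(predict(poisoned_model, request))                     # malicious
print(predict(poisoned_model, f"{request} {TRIGGER}"))      # benign
```

The poisoned model behaves normally on ordinary inputs, which is what makes backdoors hard to catch with standard accuracy testing.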

AI training data poisoning illustration (Nightshade) (Source: Wikipedia)

The most well-known example of data poisoning is a tool called Nightshade. Built and documented in a research paper published in 2023, Nightshade allows users to invisibly tweak images to degrade the performance of text-to-image models. Originally designed as a tool for artists who were frustrated by their images being used by AI businesses without their permission, Nightshade allows users to generate works that corrupt and confuse models that are trained on them. As an illustration of how Nightshade works, if a model is trained on enough poisoned examples, a prompt that asks for an image of a dog fetching a ball might instead get an image of a cat sitting on a windowsill.

2.3 The hidden risks of AI code generation

While data poisoning in text-to-image applications poses a disruptive but ultimately benign risk, AI code generation poses a potentially devastating one. It’s not implausible to imagine that one day a state-sponsored actor will leverage data poisoning to introduce security vulnerabilities into software, operating systems, and control systems. The way a state-sponsored actor might do this is straightforward:

- Knowing that AI startups need more and more data to train their models, a state-sponsored actor could create a series of GitHub repositories containing poisoned code.

- This code will then make its way into AI startups’ training datasets by way of web scraping and data-sharing agreements.

- Models that are trained on this data will become poisoned with backdoors that introduce security vulnerabilities only in the context of certain phrases, such as “industrial control center.”

- Developers leveraging these AI coding tools unwittingly copy and paste these critical vulnerabilities into their codebases.

Businesses can reduce their exposure to supply chain attacks by using only vetted LLM providers and, where possible, selecting providers who can supply a bill of materials for their LLM training data. Understanding what went into a model is the best defense against this class of attack.

While cases as dramatic as these will be few and far between, businesses should be most on the lookout for unintentional vulnerabilities. A 2022 study found that developers who use coding assistants are more likely to introduce bugs and vulnerabilities. More concerning still, participants with access to an AI assistant were more likely to believe they had written secure code than those without access.

It is important that businesses find ways to keep these vulnerabilities out of production, for example by employing static code-scanning tools such as Checkmarx and by training their developers on best practices for interacting with AI coding tools.

2.4 Preventing code vulnerabilities

LLM-induced code vulnerabilities can be prevented through effective generative AI governance. Businesses can prevent Gen AI from introducing code vulnerabilities by becoming aware of the risk of supply chain attacks for LLMs and creating policies that restrict usage of LLMs to a list of pre-approved vendors. LLMs with untraceable lineages from open-source providers may be excluded from use.

Another concern is that unsuspecting engineers may trust AI-generated code without verifying its security implications, leading to issues like insecure authentication, hardcoded secrets, or injection vulnerabilities. Businesses should establish secure coding guidelines, AI code review processes, and security training to ensure engineers critically assess AI-suggested code before integration.
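As a rough illustration of an automated check that could sit in an AI code review process, the sketch below flags two of the issues named above in a snippet of suggested code. The rule set, the `review` function, and the sample snippet are all hypothetical and deliberately tiny; commercial static analysis tools go far beyond line-by-line pattern matching.

```python
import re

# Hypothetical, deliberately small rule set for illustration only.
RULES = {
    # A secret-like variable name assigned directly to a quoted literal.
    "hardcoded secret": re.compile(
        r"""(?i)(password|secret|api_key|token)\s*=\s*["'][^"']+["']"""
    ),
    # A query passed to execute() as an f-string instead of with parameters.
    "possible SQL injection": re.compile(r"""execute\(\s*f["']"""),
}

def review(code):
    """Return (line number, finding) pairs for every rule that matches."""
    findings = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings

# A made-up AI suggestion containing two problems and one acceptable line.
SUGGESTED = "\n".join([
    'password = "hunter2"',
    'cursor.execute(f"SELECT * FROM users WHERE name = \'{name}\'")',
    'token = os.environ["API_TOKEN"]',
])

for lineno, finding in review(SUGGESTED):
    print(f"line {lineno}: {finding}")
```

A check like this does not replace human review; it only gives reviewers a prompt to look more closely before the suggestion is merged.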

Lastly, when it comes to developing policies related to the use of AI-generated code, the unique risk profile of each domain should be taken into account. In critical infrastructure applications, businesses may decide that the threat of AI-induced code vulnerabilities looms too large to employ AI coding tools, but in the case of front-end web development, they may decide that secure coding guidelines, AI code review processes, and security training have reduced the risk to a sufficient degree.

3.0 A taxonomy for generative AI risks

3.1 Risks of generative AI systems

Generative AI presents a wide range of risks that can be categorized along two key dimensions: the party affected (first party vs. third party) and the intent behind the interaction (benign vs. malicious). These risks can be further classified into present and emerging threats, each with significant ethical, legal, and societal implications.

Present risks primarily arise from unintended consequences of AI-generated content. One such concern is harmful information, where large language models (LLMs) produce misleading, inaccurate, or unqualified advice, potentially leading to material harm in areas such as healthcare, finance, or law.

Another immediate concern is harm against groups, where generative AI perpetuates biases, promotes discrimination, or reinforces exclusionary norms, leading to broader societal inequalities. Furthermore, the distribution of sensitive content presents a significant threat, particularly when LLMs expose private information, generate inferred personal data, or disseminate illicit materials. While user intent in these cases may vary, the impact on privacy and safety can be severe.

Beyond these present risks, emerging threats highlight the potential for generative AI to be exploited by malicious actors or to develop misaligned objectives. A more speculative but serious long-term concern is LLM misalignment, in which a model interacts unpredictably with other digital ecosystems such as search engines, messaging platforms, or marketplaces. This could lead to cascading risks, including large-scale business disruption.

As generative AI systems continue to evolve, it is essential to assess and mitigate these risks through responsible AI development, regulatory oversight, and robust ethical frameworks. Understanding both current and potential threats will help ensure that AI technologies are harnessed for beneficial applications while minimizing harm to individuals, communities, and society at large.

3.2 Vulnerabilities in generative AI systems

In addition to categorizing the risks associated with generative AI, it is important to examine the underlying vulnerabilities that contribute to these risks. These vulnerabilities expose AI models to risks such as unreliable outputs, susceptibility to manipulation, and misaligned behaviors when integrated into external systems.

Unreliability is a fundamental vulnerability in AI systems, occurring when models unintentionally produce harmful or undesirable results. Even when users interact with an AI model as intended, without malicious intent, the system may generate misleading information, unqualified advice, or biased and discriminatory content. Additionally, unreliable models may inadvertently expose sensitive or confidential information, leading to privacy concerns. This vulnerability underscores the challenge of ensuring that generative AI systems consistently provide accurate, fair, and responsible outputs.

Another critical vulnerability is susceptibility to adversarial prompts, where users intentionally manipulate AI models to generate unintended responses. Attackers may exploit techniques such as prompt injection, encoded inputs, or the insertion of hidden triggers to bypass content safeguards. These adversarial strategies continue to evolve, requiring ongoing research and red-teaming efforts to identify and mitigate emerging threats. Without robust defenses against adversarial attacks, AI systems remain vulnerable to manipulation, increasing the likelihood of misinformation, harmful content, or unauthorized actions.

Finally, misaligned agency presents a growing risk as AI models are deployed in environments where they have access to tools and external systems. When an LLM operates autonomously or executes tasks beyond user intentions, it can cause unintended or even harmful outcomes. The severity of this vulnerability escalates as AI systems gain broader access to real-world tools and decision-making processes, raising concerns about their alignment with human values and control. Addressing misaligned agency is critical to ensuring that AI-driven automation remains safe, predictable, and aligned with intended objectives.

By understanding these vulnerabilities, organizations can take proactive steps to mitigate risks through robust AI governance, continuous monitoring, and improved alignment techniques. As AI systems continue to evolve, addressing these weaknesses will be crucial to ensuring their safe and ethical deployment.

4.0 The risks of human-AI interactions

4.1 AI hallucination

A 2024 study by Stanford RegLab and the Center for Human-Centered AI found that AI hallucination rates ranged from 69 to 88 percent when responding to complex legal queries. Since then, several LLMs tailored for the legal market have emerged, leading to a significant reduction in hallucination rates, down to between 17 and 34 percent, according to Stanford researchers.

While this progress is noteworthy, even a single AI-generated hallucination in the legal profession can have serious consequences. For example, Morgan & Morgan, the 42nd largest law firm in the United States, was sanctioned in 2025 after presenting fabricated case citations generated by AI. Improper handling of AI hallucinations can expose businesses to legal liability as well as tarnish their reputations. Businesses should focus on prevention, mitigation, and measurement to reduce the risk of AI hallucinations impacting key business processes. The next three subsections will show businesses how they can protect themselves from these risks.

Prevention

Businesses can implement safeguards from AWS and Azure to enhance LLM reliability. Amazon Bedrock Guardrails, for example, uses automated reasoning to filter out over 75 percent of hallucinated responses in retrieval-augmented generation (RAG). Similarly, Azure AI Content Safety offers groundedness detection and automated correction for RAG applications.

Mitigation

For the foreseeable future, no system will be 100 percent effective at preventing AI hallucinations. Since there are no foolproof safeguards, businesses must train employees to recognize and prevent the spread of AI-generated misinformation. Mandatory training will equip users with the skills they need to spot hallucinations. Additionally, workers using AI in highly regulated fields such as finance, medicine, and law should be given extra training, as the consequences of passing off hallucinations as fact are greater in these fields.

Measurement

While measuring hallucination rates requires human effort, it provides a crucial measure of risk and a baseline for improvement. Tools such as Amazon SageMaker Clarify and Amazon SageMaker Ground Truth enable executives to benchmark hallucination rates and determine whether their use case is ready for generative AI. Businesses should prioritize high-risk applications for hallucination measurement.
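Whatever tooling is used, the underlying arithmetic is simple. The sketch below assumes a team has had humans review a sample of responses and mark each one as hallucinated or not; it reports the observed rate together with a Wilson score interval so that a small sample is not mistaken for a precise benchmark. The function name and the sample figures are made up for illustration.

```python
import math

def hallucination_rate(labels, z=1.96):
    """Return (rate, low, high) for human-reviewed labels.

    labels: iterable of booleans, True if the response was a hallucination.
    z: normal quantile for the interval (1.96 is roughly 95 percent).
    The bounds use the Wilson score interval for a binomial proportion.
    """
    labels = list(labels)
    n = len(labels)
    if n == 0:
        raise ValueError("need at least one reviewed response")
    p = sum(labels) / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, max(0.0, center - half), min(1.0, center + half)

# Hypothetical review: 100 sampled responses, 17 marked as hallucinations.
reviewed = [True] * 17 + [False] * 83
rate, low, high = hallucination_rate(reviewed)
print(f"observed rate {rate:.0%}, plausible range {low:.0%} to {high:.0%}")
```

Tracking the interval as well as the rate helps decision makers see when more reviewed samples are needed before declaring a use case ready.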

4.2 Mitigating the risks of hallucinations and human-AI interactions

A well-structured governance program is essential for mitigating the risks associated with Generative AI hallucinations. Effective governance frameworks ensure that AI deployments align with risk tolerance levels by integrating prevention, mitigation, and measurement strategies.

By leveraging safeguards like Amazon Bedrock Guardrails and Azure AI Content Safety, organizations can significantly reduce hallucination rates in retrieval-augmented generation (RAG) applications. However, technology alone is insufficient—governance programs must also emphasize employee training to recognize and counteract AI-generated misinformation.

Mandatory training sessions and risk assessments tailored to specific AI use cases help prevent erroneous outputs from influencing critical decisions. Additionally, governance programs should incorporate ongoing measurement through tools like Amazon SageMaker Clarify to establish benchmarks and track improvements over time. By embedding these principles into AI governance, businesses can enhance trust, reliability, and regulatory compliance while mitigating the operational risks posed by AI hallucinations.

5.0 Data security and privacy

LLMs consume and generate data much like many business applications, which means that security teams should be tasked with governing all of the data that enters and exits LLMs. As with any other application, care should be taken to:

- Enable role-level security.

- Log and audit the usage of data.

- Evaluate LLM vendors for security compliance.
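The first two controls in the list above can be sketched as a thin wrapper around every LLM call. This is an illustration under stated assumptions, not a reference design: the roles, data classifications, `governed_query` function, and the stubbed `call_llm` are all hypothetical, and a production system would rely on the organization's existing identity and logging infrastructure.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("llm.audit")

# Hypothetical mapping of roles to the data classifications they may send.
ROLE_PERMISSIONS = {
    "analyst": {"public", "internal"},
    "contractor": {"public"},
}

def call_llm(prompt):
    """Placeholder for a real vendor call; returns a canned string."""
    return f"[stubbed response to {len(prompt)} characters]"

def governed_query(user, role, data_class, prompt):
    """Check role-level permissions and write an audit record before calling the LLM."""
    allowed = data_class in ROLE_PERMISSIONS.get(role, set())
    # Every attempt is logged, whether or not it is allowed.
    audit_log.info(
        "%s user=%s role=%s data_class=%s allowed=%s",
        datetime.now(timezone.utc).isoformat(), user, role, data_class, allowed,
    )
    if not allowed:
        raise PermissionError(f"role '{role}' may not send {data_class} data")
    return call_llm(prompt)

print(governed_query("ana", "analyst", "internal", "Summarize Q3 notes"))
```

Logging denied attempts alongside permitted ones gives auditors a record of where policy and actual usage diverge.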

LLM vendor terms of service should be screened by legal departments to ensure that vendors are not taking advantage of company data, because many vendors regularly train their models on user queries and outputs. Businesses can also mitigate this risk by selecting cloud providers like Azure and AWS, which allow businesses to leverage isolated instances of LLMs that are kept safe behind private networks.

6.0 Vulnerabilities in workflows and agents

As defined by LangChain, a major startup within the LLM agent space, “Workflows are systems where LLMs and tools are orchestrated through predefined code paths. Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.” Workflows and agents are higher-order versions of LLMs, and as such, they carry their own risks.

6.1 Workflows

Workflows operate in a directed fashion. They take predefined paths, and their behavior is easy to understand and debug.

A simple example of an LLM workflow is a weather app. Imagine an app that allows the user to ask about the weather using natural language.

- The user can prompt the app and ask, “What is the weather like near me today?”

- The app then converts the user’s request into data, which it then sends to an API endpoint.

- The workflow then receives the result from the API, parses it into human-readable format, and returns the result to the user.

RAG schema (Source: Wikimedia Commons)
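The weather app steps described above can be sketched as a fixed pipeline. Both the LLM call and the weather API are stubbed here, and every function name and return value is hypothetical; the point is only that the code path is predefined and the model never chooses what happens next.

```python
import json

def llm_extract_request(user_prompt):
    """Stand-in for an LLM call that turns free text into structured data."""
    # A real system would call a model here; this stub returns a fixed shape.
    return {"location": "near the user", "date": "today"}

def weather_api(request):
    """Stand-in for the weather API endpoint; returns a JSON string."""
    return json.dumps(
        {"location": request["location"], "temp_c": 18, "sky": "cloudy"}
    )

def format_answer(api_response):
    """Parse the API result into a human-readable sentence."""
    data = json.loads(api_response)
    return f"Weather {data['location']}: {data['temp_c']} degrees C and {data['sky']}."

def weather_workflow(user_prompt):
    """Predefined path: prompt -> structured request -> API -> answer."""
    request = llm_extract_request(user_prompt)
    response = weather_api(request)
    return format_answer(response)

print(weather_workflow("What is the weather like near me today?"))
```

Because the path is fixed, each stage can be tested and debugged in isolation, which is the main appeal of workflows over agents.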

Like all LLM-powered applications, workflows can be easily exploited through prompt injection, in which a malicious actor tricks LLMs into ignoring system instructions and performing unexpected or prohibited actions.

In February 2023, Microsoft released its LLM-powered Bing chatbot, which allowed users to chat with a conversational AI. The next day, users were able to use prompt injection to trick the chatbot into revealing its system prompt. For workflows, the attack surface is effectively doubled because attackers can inject prompts either through the chat interface or through the other digital ecosystems that workflows often access. Returning to the example of a weather app workflow, a hacker could use prompt injection to override the workflow and instead return critical configuration details about the weather API.

6.2 Agents

Agents operate in a decidedly unpredictable fashion. When a user gives an AI agent a task, it iteratively works on the task until it decides the task is complete. The behavior of agents is much more open-ended, and as of 2025, the most common use cases for agents are coding and research.

Within the coding domain, an agent might take a prompt from a user such as, “Build me a video game” and proceed to iteratively code, build, and test the program until the agent decides that it is complete. Coding agents pose a special risk because they have the ability to develop and execute code.

A malicious actor could leverage prompt injection to trick a coding agent into mining bitcoin, or they could use it to test for vulnerabilities within a company’s network. And because AI agents run in a deliberately unpredictable fashion, it can be even harder to detect something that has gone wrong.

6.3 Reducing the risks of workflows and agents

Businesses may want to consider prohibiting the use of AI workflows and agents in a customer-facing capacity, but even then, a workflow that sits behind company firewalls may still pose a threat to company security.

Consider a workflow that ingests customer support tickets, identifies a solution, and notifies the responsible departments. A malicious actor could add prompt injection text to a support ticket to trick that workflow into sending fraudulent invoices to the billing department.

Vendors are rolling out tools to monitor and prevent prompt injection. Businesses can use tools such as Amazon Bedrock Guardrails or Prompt Shields in Azure AI Content Safety to detect and block prompt injection; however, it’s important to realize that these services are often machine learning classifiers themselves, and therefore are not 100 percent foolproof.

In addition to leveraging prompt screening services, businesses should design workflows and agents around the principle of least privilege. Database, web, code execution, and API privileges for workflows and agents should be restricted in such a way that workflows and agents can do their jobs and nothing else. Businesses should also engage in periodic penetration testing in which teams of experts try to jailbreak workflows and agents.
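One way to picture restricted privileges is a tool dispatcher that enforces a per-workflow allowlist. The sketch below assumes a support ticket workflow like the one described earlier in this section; the tool names, the allowlist, and the `dispatch` function are all hypothetical. Even if injected text convinces the model to request an invoice, the dispatcher refuses because that tool was never granted.

```python
class ToolNotPermitted(Exception):
    """Raised when a workflow requests a tool outside its allowlist."""

def read_ticket(ticket_id):
    return f"ticket {ticket_id}: printer offline"

def notify_department(department, message):
    return f"notified {department}: {message}"

def send_invoice(department, amount):
    return f"invoice for {amount} sent to {department}"

# Every tool that exists in the (hypothetical) environment.
ALL_TOOLS = {
    "read_ticket": read_ticket,
    "notify_department": notify_department,
    "send_invoice": send_invoice,
}

# The support workflow is granted only what its job requires.
SUPPORT_WORKFLOW_ALLOWLIST = {"read_ticket", "notify_department"}

def dispatch(tool_name, *args, allowlist):
    """Run a tool only if the calling workflow has been granted it."""
    if tool_name not in allowlist:
        raise ToolNotPermitted(f"'{tool_name}' is not permitted for this workflow")
    return ALL_TOOLS[tool_name](*args)

# Normal operation succeeds.
print(dispatch("read_ticket", 42, allowlist=SUPPORT_WORKFLOW_ALLOWLIST))

# A tool call induced by prompt injection is rejected at the boundary.
try:
    dispatch("send_invoice", "billing", 9999, allowlist=SUPPORT_WORKFLOW_ALLOWLIST)
except ToolNotPermitted as err:
    print(f"blocked: {err}")
```

The check lives in ordinary code outside the model, so it holds regardless of what the model has been tricked into asking for.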

6.4 Reducing the risks of agentic AI

Good governance is essential for mitigating the risks associated with generative AI copilots, workflows, and agents. Since AI workflows operate in predefined paths and agents function with greater autonomy, both pose security and compliance risks, including prompt injection attacks and unintended data exposure.

A robust governance framework should incorporate stringent access controls, enforce least-privilege security principles, and mandate periodic penetration testing to identify vulnerabilities. Additionally, organizations should implement AI-specific monitoring tools like Amazon Bedrock Guardrails or Prompt Shields in Azure AI Content Safety while recognizing their limitations.

Governance should also extend to establishing clear accountability structures, ensuring AI-driven decisions align with business objectives, ethical standards, and regulatory requirements. Governance-driven development is a framework that will allow businesses to remain competitive while striking a balance between innovation and risk.

7.0 Risks, rewards, and the value of AI governance

7.1 The value of generative AI

Thirty-three percent of employees and 44 percent of CEOs are daily users of ChatGPT. In early 2023, OpenAI’s ChatGPT reached 100 million users just two months after launch. By comparison, it took TikTok nine months after its global launch to reach 100 million users and Instagram two and a half years.

While the risks of generative AI are looming, the value is undeniable. LLMs are driving productivity across industries, enabling businesses to scale their operations, reduce costs, and improve efficiency. Early adopters across sectors such as software development, retail, and customer service have already seen measurable productivity gains, and the overall economic implications are substantial. As organizations continue to integrate LLMs into their operations, the potential for major economic impact is becoming increasingly clear.

In software development, LLMs have proven to significantly enhance coding productivity. An analysis of over 934,000 GitHub Copilot users sponsored by Harvard Business Review and GitHub revealed that, on average, developers accepted nearly 30 percent of the AI-generated code suggestions. According to Harvard Business School, this surge in developer productivity could contribute more than $1.5 trillion USD to the global GDP by 2030.

Even traditionally conservative players such as the retail sector are already seeing gains in productivity from Generative AI. In a recent earnings call, the CEO of Walmart stated that they are using large language models to improve the quality of over 850 million data points in their product catalog—a task that would have required nearly 100 times the current headcount to achieve manually.

In customer service, Klarna deployed an AI assistant to handle a substantial portion of customer interactions. According to a recent press release, Klarna’s AI has conducted 2.3 million conversations, accounting for two-thirds of Klarna’s customer service chats. Meanwhile, Klarna’s AI is comparable to human agents in terms of customer satisfaction while being more accurate in resolving customer inquiries, resulting in a 25 percent reduction in repeat inquiries. Lastly, Klarna’s AI assistant has reduced average resolution times from 11 minutes to under 2 minutes.

In 2025, the biggest applications for AI agents belong to the coding and customer service spaces. ServiceNow recently released its AI Agent Orchestrator, which oversees teams of specialized AI agents as they work together across tasks, systems, and departments to achieve a specific goal, such as fielding an employee software request. Meanwhile, platforms like Cursor, Replit, and Windsurf allow users to develop entire applications from scratch using a single prompt.

7.2 Risk-reward matrices

Businesses looking to adopt generative AI should carefully evaluate potential use cases by weighing the risks and rewards. The reward side of the equation involves understanding how AI can drive efficiency, innovation, and cost savings. Organizations should prioritize use cases that offer clear value, whether through automating content creation, improving customer interactions, enhancing decision-making, or streamlining operations. However, they must also assess the risks.

Risk is inherently tied to the regulatory environment of a given domain. In highly regulated industries such as healthcare, finance, or energy, AI applications must comply with stringent legal and ethical standards, increasing the complexity and potential liabilities. For example, AI-generated financial advice must adhere to financial compliance regulations, while AI-driven healthcare applications must meet patient privacy and safety standards. Businesses operating in these spaces need to conduct rigorous risk assessments, ensuring that AI implementations do not inadvertently introduce bias, misinformation, or security vulnerabilities.

Lastly, risk is also tied to the technology itself. Copilots, workflows, and agents unlock ever more powerful use cases, but their power is a risk in and of itself. All AI systems make mistakes, and the potential to do harm is greater for AI systems that are tasked with diagnosing medical conditions and analyzing financial markets. Understanding these risks early allows organizations to proactively implement controls that mitigate potential harm while still capturing the value of AI.
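A risk-reward matrix can start as something very simple. The sketch below assumes each use case has been scored from 1 to 5 on reward and on risk by the governance team; the scores, the threshold, the quadrant labels, and the example use cases are all hypothetical placeholders rather than recommendations.

```python
from dataclasses import dataclass

@dataclass
class UseCase:
    name: str
    reward: int  # 1 (low) to 5 (high), as scored by the governance team
    risk: int    # 1 (low) to 5 (high)

def quadrant(use_case, threshold=3):
    """Place a use case in one of four quadrants of the matrix."""
    high_reward = use_case.reward >= threshold
    high_risk = use_case.risk >= threshold
    if high_reward and not high_risk:
        return "prioritize"
    if high_reward and high_risk:
        return "pilot with strong controls"
    if not high_reward and not high_risk:
        return "low priority"
    return "avoid"

# Made-up scores for illustration only.
candidates = [
    UseCase("product catalog cleanup", reward=4, risk=2),
    UseCase("automated financial advice", reward=5, risk=5),
    UseCase("meeting note summaries", reward=2, risk=1),
    UseCase("unsupervised contract drafting", reward=2, risk=4),
]

for uc in candidates:
    print(f"{uc.name}: {quadrant(uc)}")
```

The scoring itself is where the real work lies; regulated domains and more autonomous technology should push the risk score up, as described above.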

7.3 How governance tips the risk-reward scale

Proper governance is essential for responsibly deploying generative AI. Organizations should establish a robust AI governance framework that includes clear policies on data usage, model transparency, bias detection, and human oversight. Governance structures should align with industry regulations and best practices, ensuring AI-generated outputs are explainable, auditable, and aligned with ethical considerations.

Additionally, businesses should implement monitoring mechanisms to track AI performance and detect unintended consequences over time. By embedding governance into the AI adoption process, businesses can strike the right balance between innovation and risk management, ultimately ensuring that AI-driven solutions are both effective and responsible.

8.0 Setting up an AI governance program

8.1 Keep the goal in mind

Setting up a generative AI governance program requires a structured yet agile approach to managing risks while maximizing value. First, organizations should establish a cross-functional AI governance committee comprising stakeholders from IT, risk management, legal, compliance, and business units. This committee should define clear policies on AI usage, vendor selection, and data security, ensuring compliance with industry regulations and internal risk thresholds. Key elements include restricting AI usage to vetted providers, implementing secure coding guidelines, and deploying static code analysis tools to mitigate vulnerabilities introduced by AI-generated code. Additionally, organizations should enforce robust data privacy measures, including access controls, audit logs, and contractual agreements with AI vendors to prevent unintended data exposure.

Next, organizations must implement technical safeguards to address risks such as AI hallucinations, prompt injection, and adversarial attacks. This involves leveraging AI monitoring tools like Amazon Bedrock Guardrails, Azure AI Content Safety, and automated reasoning frameworks to detect and mitigate AI errors. Regular security audits, penetration testing, and model evaluations should be incorporated into the governance framework to proactively identify vulnerabilities in workflows and AI agents. To further enhance security, businesses should adopt least-privilege access models, ensuring AI systems have only the permissions necessary to perform their intended tasks, thereby reducing exposure to exploitation.

Lastly, fostering a culture of responsible AI usage through employee training and continuous risk assessment is crucial. Employees interacting with AI systems should be trained to recognize AI-generated errors, biases, and security risks, ensuring that they critically assess outputs before integration into decision-making processes. Additionally, organizations should conduct ongoing risk-reward evaluations for AI deployments, weighing productivity gains against potential operational, legal, and reputational risks. By embedding these governance principles, businesses can responsibly harness generative AI’s potential while minimizing its risks.

8.2 From zero to one

The AI revolution isn’t waiting, and neither are today’s businesses. Companies should prioritize setting up foundational elements that can be implemented swiftly while laying the groundwork for a more comprehensive governance program. Here’s how to begin:

Define clear policies and guidelines: The first step is to establish clear, actionable AI governance policies that include usage guidelines, security protocols, and acceptable AI vendors. This can be done quickly by creating a “playbook” or policy document that outlines who can use AI, under what conditions, and which vendors and tools are approved. Ensuring that AI-generated outputs meet specific quality and compliance standards is critical here. By selecting trusted AI providers and limiting AI usage to specific business areas, organizations can immediately begin to mitigate risks like data poisoning and hallucinations.

Implement basic security controls: Companies should start with essential security measures such as data access controls, role-based security, and logging mechanisms for AI data and interactions. A quick win would be leveraging existing tools like Amazon Bedrock Guardrails or Azure AI Content Safety for immediate protection against hallucinations and prompt injections. By introducing these technical safeguards, companies can quickly reduce immediate risks associated with AI interactions, such as misinformation or vulnerabilities in AI-generated code.

Train key teams and set up a risk assessment framework: Rapid training of employees and teams interacting with AI tools is essential for immediate results. To start seeing results, companies should also implement a straightforward risk-reward matrix for their most immediate AI use cases, assessing which ones offer high value with manageable risk. This allows for prioritizing projects that can bring quick, tangible benefits while keeping a close eye on compliance and security from the get-go.

These initial steps will help businesses quickly reduce risks and begin seeing the value of AI adoption, while creating a solid foundation for building out a full governance program over time.

9.0 Final thoughts

Businesses across the globe are eager to adopt generative AI to stay competitive, drive efficiency, and enhance customer experiences. However, without a strong governance framework, AI adoption can be slowed by security concerns, compliance risks, and ethical uncertainties. At Logic20/20, we believe that a well-defined generative AI governance strategy not only mitigates these risks but also accelerates AI adoption by providing clear guidelines for responsible deployment. By proactively addressing challenges such as prompt injection, data security, and unintended biases, businesses can confidently integrate AI into their workflows while maintaining trust with customers and stakeholders. Our expertise in generative AI enables organizations to implement governance structures that streamline decision-making, foster cross-functional collaboration, and ensure compliance with evolving regulations—allowing them to innovate faster and more securely.
