Quick summary: How financial institutions are navigating the adoption of AI, balancing innovation with risk management to drive strategic growth and long-term value.

The financial services industry is undergoing a pivotal shift in how it approaches artificial intelligence. While adoption to date has been measured, momentum is building as decision makers begin moving beyond isolated pilots and into more strategic discussions about enterprise impact. Across banks, investment firms, insurers, and other financial institutions, leaders are balancing the promise of AI with the realities of compliance, data privacy, and institutional risk.

At the same time, the competitive landscape is shifting. AI capabilities are evolving quickly, and stakeholder expectations are rising. For financial leaders, the challenge is to find a responsible path forward, one that protects core values while unlocking the strategic benefits of artificial intelligence.

In this article, we explore the current state of AI adoption in financial services, what’s driving momentum, where hesitation remains, and what leadership teams can do now to prepare for responsible, strategic growth.

ASSESSMENT

Evaluate your AI readiness across five key areas: strategy alignment, data foundations, governance, talent, and operational integration.

The 5×5 AI Readiness Assessment reveals where your organization stands today and delivers a personalized report with recommended next steps.

The cautious pivot: Where financial services stand with AI in 2025

Momentum around AI in financial services is unmistakable—but so is the caution guiding its rollout. More than 80 percent of financial institutions report using AI in some form, yet most initiatives remain confined to low-risk test cases or internal tools. Generative AI has sparked curiosity, but practical applications are still largely limited to document drafting, internal search, and automation of repetitive tasks.

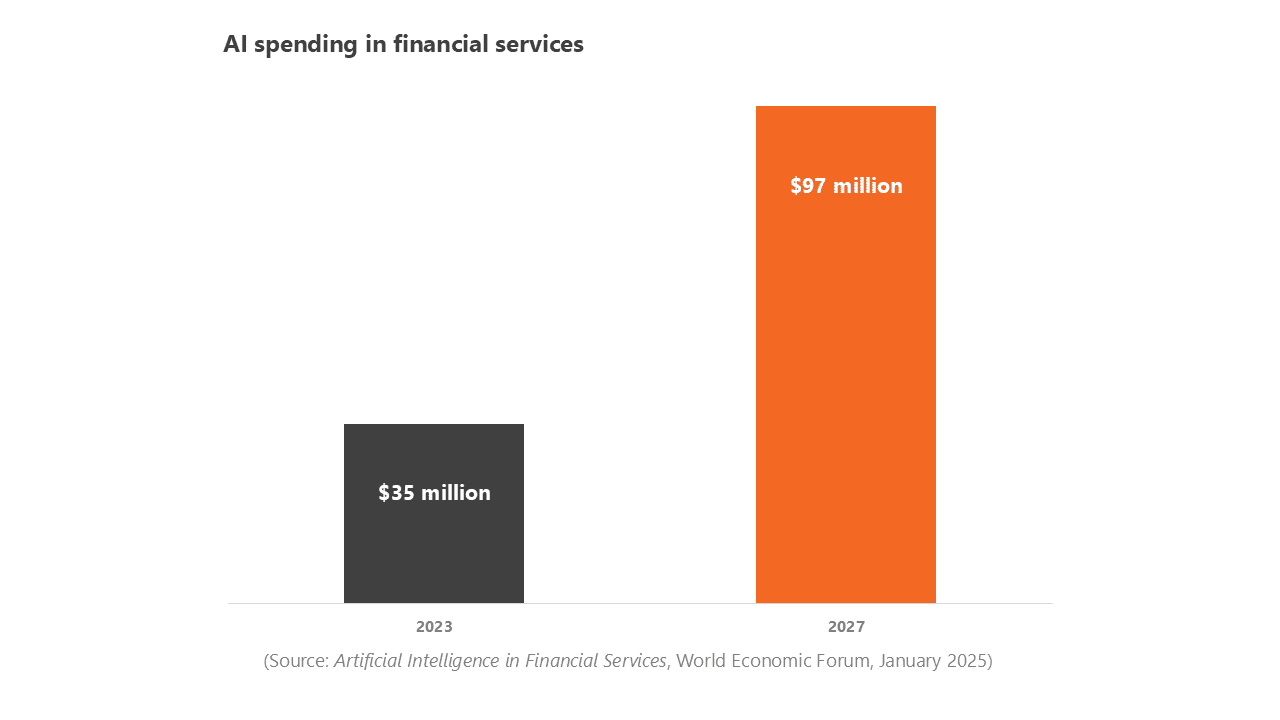

In 2023, the industry spent more than $35 billion on AI, with that figure projected to nearly triple by 2027. Still, fewer than one in three firms reports achieving significant return on those investments. The gap between ambition and execution reflects a broader hesitancy across the sector, shaped by tight regulations, institutional risk frameworks, and persistent concerns around privacy, bias, and explainability.

For many financial leaders, the strategy has been to observe early adopters, test cautiously in controlled environments, and delay broader rollouts until risks are better understood. While that approach aligns with the industry’s risk posture, it also raises the possibility of falling behind as peer institutions begin to move more decisively.

Despite these constraints, many institutions are identifying targeted use cases where AI can demonstrate value with limited risk.

Key use cases: Where AI is gaining traction

While enterprise-wide adoption remains limited, several high-impact use cases are showing early promise. These areas offer financial institutions a controlled environment to test AI capabilities, reduce manual workloads, and generate measurable returns—while steering clear of customer-facing functions or high-risk decision making.

Compliance as a proving ground

Regulatory compliance has emerged as one of the most practical entry points for AI in financial services. Tools that automate the review of transactions, track shifting regulatory requirements, and generate draft reports—such as Suspicious Activity Reports (SARs)—are already easing the burden on compliance teams. These systems can flag anomalies across vast datasets with far greater speed and consistency than manual processes allow, while maintaining essential human-in-the-loop oversight.
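
To make the flag-then-review pattern concrete, here is a minimal Python sketch. It is illustrative only: the function name `flag_for_review`, the sample amounts, and the choice of a modified z-score with a 3.5 cutoff are assumptions for this example, not a description of any particular vendor tool or regulatory standard. The code only nominates transactions for a human reviewer; it makes no decision on its own.

```python
from statistics import median


def flag_for_review(amounts, threshold=3.5):
    """Return the indices of amounts that look unusual and should go to a human reviewer.

    Uses a modified z-score based on the median absolute deviation (MAD),
    which is less distorted by extreme values than a mean-based score.
    The threshold is an assumed, tunable cutoff.
    """
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        # At least half the values equal the median; nothing to score against.
        return []
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical transaction amounts; one is far outside the usual range.
sample_amounts = [120.0, 95.5, 130.25, 110.0, 9800.0, 101.75, 99.0]
review_queue = flag_for_review(sample_amounts)
print(review_queue)
```

In practice the flagged indices would feed a case-management queue where an analyst confirms or dismisses each item, which is what keeps the human in the loop.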

As noted in a recent Fast Company article by Logic20/20 COO Travis Jones, compliance is proving to be a prudent first step for many institutions: it’s high in volume, rules-based, and largely internal. Success here builds organizational confidence, reduces operational risk, and helps align AI efforts with board-level priorities.

Operational efficiency and automation

AI is also gaining ground in operational areas where automation and workflow optimization drive clear ROI. Early applications—including optical character recognition (OCR) for document processing and knowledge assistants that support call center agents—are already streamlining routine tasks, reducing error rates, and improving employee productivity. Some institutions are layering in sentiment analysis to better understand customer interactions, though typically only on anonymized, internal datasets.

While these efforts may not be transformative on their own, they are laying the technical and organizational foundation for broader, more strategic use of AI.

Customer experience and personalization

On the front end, a growing number of institutions are exploring AI to enhance customer engagement. Use cases include product recommendation engines, proactive financial coaching bots, and tools that tailor communications based on behavioral data. These applications remain relatively rare among large banks, where risk tolerance for customer-facing AI is still low. However, smaller institutions and digital-first players are beginning to test conversational agents and embedded AI assistants in limited scopes.

Taken together, these early use cases illustrate a cautious but forward-moving path in which financial services firms can build capability and confidence before taking on more advanced deployments.

The emerging frontier: Agentic AI in financial services

While most institutions are still focused on foundational AI use cases, interest in agentic AI is beginning to grow. These systems go beyond simple automation to operate with a degree of autonomy. In financial services, potential applications include portfolio rebalancing based on market signals, autonomous customer service bots that manage complex interactions end to end, and coordinated AI agents that monitor compliance in real time.

As of 2025, only 6 percent of financial firms have deployed agentic AI in production environments, but 38 percent report plans to adopt it within the next 12 months. This suggests a growing interest as more organizations move from experimentation to implementation in use cases where autonomy can drive measurable value.

The potential benefits are significant: faster decision cycles, continuous service availability, and highly scalable personalization. But so are the risks. A misaligned autonomous model could trigger compliance violations, expose the brand to reputational harm, or produce outcomes that are difficult to explain or reverse. For that reason, most agentic AI development remains confined to controlled pilots, typically in fintech startups or high-frequency trading environments where speed and automation are core to the business model.

For traditional institutions, exploring agentic AI will require strong governance, robust oversight frameworks, and a clear understanding of where autonomy adds value without increasing exposure.

Why full-scale adoption remains elusive

Despite growing interest and isolated wins, several persistent challenges are keeping many financial institutions from scaling AI across the enterprise.

Data privacy and security remain at the top of the list. Given the sensitivity of customer financial data, even internal AI tools must pass rigorous scrutiny from compliance and cybersecurity teams. Concerns about exposing personally identifiable information (PII) or triggering regulatory violations have made many institutions hesitant to deploy models beyond tightly controlled environments.

Regulatory uncertainty also plays a major role. With AI-specific regulation still evolving, particularly around transparency and bias, many leaders are reluctant to invest heavily in systems that may soon need retooling. Only 9 percent of financial firms report feeling prepared for forthcoming AI regulations, underscoring a widespread readiness gap.

Meanwhile, AI governance practices are still maturing. While institutions recognize the need for explainable and auditable systems, few have fully developed the frameworks necessary to operationalize oversight at scale. Model risk management, version control, and escalation protocols are often still in development or siloed within specific business units.

Finally, skill gaps continue to slow momentum. The shortage of experienced AI talent—spanning data science, ethics, infrastructure, and regulatory fluency—makes it challenging for institutions to move quickly and confidently. Even promising projects can stall in review cycles if internal stakeholders lack the expertise to evaluate risks or validate outcomes.

Together, these barriers help explain why many AI efforts in financial services remain fragmented or confined to early-stage deployments.

Moving forward with confidence: Strategic actions for financial leaders

As AI capabilities advance, financial institutions that act with intention, prudence, and structure can gain a meaningful advantage. The goal isn’t speed for its own sake but confidence: the ability to move forward with clear governance, informed leadership, and measurable outcomes.

Establish executive-level alignment and fluency

AI adoption is unlikely to succeed without leadership buy-in. For transformation to scale, decision makers must understand both the strategic opportunities AI presents and the operational and regulatory risks it introduces. Executive briefings, cross-functional working sessions, and AI fluency training can help close knowledge gaps and build a common language across business, technology, and compliance functions. These early steps set the tone for responsible experimentation and reduce the likelihood of stalled initiatives.

Start with low-risk, high-impact use cases

Internal-facing applications remain the best place to begin. Initial implementations in areas like compliance reporting, knowledge search, and document classification can yield measurable returns with relatively low exposure. These use cases also help establish internal proof points that build organizational momentum. For most institutions, it makes sense to avoid public-facing deployments or customer-impacting tools until governance and oversight mechanisms are firmly in place.

Embed governance and security from the start

Proactive governance is not just a safeguard—it’s an enabler. Institutions that build explainability, auditability, and model risk controls into their AI programs from the outset are better positioned to scale responsibly. Foundational tasks include defining ownership structures, establishing model review protocols, and ensuring that systems can be evaluated against evolving regulatory expectations. Many organizations benefit from working with partners like Logic20/20 who specialize in operationalizing responsible AI frameworks within highly regulated environments.

Use structured readiness frameworks to build momentum

An AI strategy gains power when it’s grounded in organizational readiness. Structured frameworks such as Logic20/20’s Executive AI Readiness Accelerator can help leadership teams assess maturity across key domains and align on high-value use cases. In just two days, the program delivers a strategic roadmap, a prioritized list of opportunities, and actionable next steps. For institutions seeking a lower-lift entry point, the AI Readiness Assessment provides a practical snapshot of current capabilities and a 90-day action plan tailored to specific business goals.

One global financial institution recently applied this structured, leadership-aligned approach to build a foundation for enterprise AI adoption. With support from Logic20/20, the organization assessed its data and technology environment, clarified strategic priorities, and established a roadmap for scalable implementation. As a result, the institution accelerated its readiness to operationalize AI across functions, reducing risk and enabling more confident decision making.

From experimentation to leadership

Financial institutions don’t need to rush into advanced AI, but they do need a plan. The competitive landscape is shifting quickly, and institutions that wait too long risk falling behind peers that are already advancing with structured, low-risk approaches. Leaders who take a risk-aware approach can move at a pace that matches their organization’s risk tolerance while building the capabilities needed to meet rising stakeholder expectations.

Success lies in balancing innovation with governance. Institutions that take informed, deliberate steps—starting with internal use cases, investing in executive fluency, and embedding governance early—are best positioned to scale AI responsibly and effectively.

Logic20/20 helps financial services firms move from proofs-of-concept to production with clarity, security, and competitive advantage. Through structured readiness assessments and leadership-aligned frameworks, we support organizations in building AI strategies that are as resilient as they are innovative.