Quick summary: The rapid rise of ChatGPT is a wake-up call, and an opportunity, for leaders looking to ensure that AI-driven applications are developed responsibly, ethically, and transparently.

Artificial intelligence (AI) has been with us since the 1950s, and even generative AI traces its origins to the earliest chatbots of the 1960s. So when ChatGPT burst into the public arena in November 2022, it introduced no fundamentally new technology. Instead, it packaged long-standing capabilities into a near-irresistible new form: a conversational tool that anyone can use from a web browser at no cost.

As ChatGPT captured the public imagination, it became clear that this new tool had potential as both a powerful productivity driver and a minefield of risk, particularly now that OpenAI has made the application available to development teams via APIs.

The disruption ChatGPT has caused in the business world points to a broader question: how can organizations benefit from AI while guarding against risks such as data privacy breaches and unintended bias? The answer is an effective AI governance program, which enables organizations to bring generative AI initiatives under IT oversight and permit their use within carefully designed parameters.

Why AI governance is important

AI governance establishes a framework of policies and controls around the organization’s use of artificial intelligence to ensure that ChatGPT and other AI tools are used responsibly, ethically, and transparently. Specifically, AI governance helps businesses avoid pitfalls in the following areas:

Security

Unsecured models and data sources leave companies at risk of data leakage and theft, threatening to expose customer and company secrets.

Privacy

Improperly designed models may use customer data without consent, which can violate data privacy laws and damage the company’s reputation.

Legal and compliance

Models that do not comply with applicable laws and regulations may expose companies to significant monetary penalties.

Operations

Improperly tested and deployed models may perform poorly, and models that are not monitored may degrade over time, creating significant operational challenges.

Ethics

Models that have not been properly screened for bias may harm or discriminate against customers or employees, resulting in significant reputational damage and legal liability.

Creating an AI governance board

Successful governance of any capability requires a standing governing body, and AI is no exception. An AI governance board can:

- Establish AI ethics, privacy, and security standards

- Set project charters and KPIs

- Oversee and approve the promotion of models into production

It’s important to assemble a cross-functional board that represents the business concerns relevant to AI safety, including security, privacy, and corporate ethics.

Building an effective AI governance board is a multi-phase process that requires careful consideration at every step:

- Define purpose and goals: Understand what roles the board will play in decision-making, strategic planning, oversight, and governance.

- Identify needed skills and expertise: Determine the skills, knowledge, and expertise required on the board to achieve the organization’s goals effectively.

- Develop board policies and procedures: This phase could include rules on board member selection, term limits, conflict-of-interest policies, meeting frequency, and decision-making processes.

- Recruit board members: Look for individuals who are committed to the organization’s mission and who, as a group, represent a diverse range of business units.

- Formalize governance structure: Create and adopt formal governance documents, such as the organization’s bylaws or constitution.

- Develop strategic plan: Work with the board to develop a plan for executing on objectives and strategies.

- Monitor and evaluate performance: Regularly assess the board’s performance and effectiveness, along with the organization’s progress toward its goals. Selecting the right tools is a key sub-phase here.

- Review and adapt: Periodically review the board’s structure, processes, and policies to ensure they remain relevant and effective in meeting the organization’s needs.

Incorporating AI governance processes

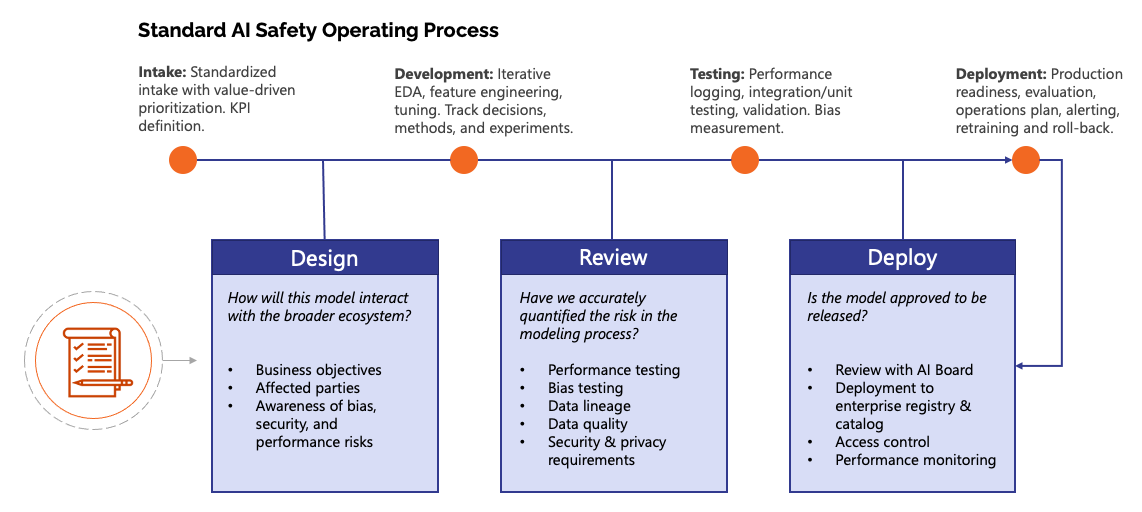

An AI governance process makes safety a standard practice throughout the data science lifecycle. The diagram above shows one example of how governance considerations can be integrated into the development, testing, and deployment of AI models.
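For development teams, one way to picture these governance considerations is as gates a model must clear before moving to the next lifecycle stage. The minimal Python sketch below assumes a hypothetical in-house model registry. The stage names, check names, `ModelRecord`, `missing_checks`, and `advance` are all illustrative assumptions, not a standard or any vendor's API. Each check is loosely mapped to the risk areas above, and the final gate includes a board sign-off.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical governance gates: checks that must be completed before a model
# may leave each lifecycle stage. Names are illustrative only, loosely mapped to
# the risk areas (security, privacy, legal/compliance, operations, ethics).
GATES: dict[str, list[str]] = {
    "development": ["data_sources_secured", "customer_consent_verified"],
    "testing": ["bias_screening_passed", "performance_tests_passed"],
    "deployment": ["compliance_review_passed", "monitoring_configured", "board_approved"],
}
# Lifecycle order (dicts keep insertion order); "production" is the final stage.
STAGES: list[str] = list(GATES) + ["production"]


@dataclass
class ModelRecord:
    """Hypothetical registry entry tracking a model's stage and completed checks."""
    name: str
    stage: str = "development"
    completed_checks: set[str] = field(default_factory=set)


def missing_checks(model: ModelRecord) -> list[str]:
    """List the checks still outstanding for the model's current stage."""
    return [c for c in GATES.get(model.stage, []) if c not in model.completed_checks]


def advance(model: ModelRecord) -> str:
    """Promote the model one stage, but only if its current gate is fully satisfied."""
    if model.stage == "production":
        raise ValueError(f"{model.name} is already in production")
    outstanding = missing_checks(model)
    if outstanding:
        raise PermissionError(f"{model.name} blocked at {model.stage}: missing {outstanding}")
    model.stage = STAGES[STAGES.index(model.stage) + 1]
    return model.stage


if __name__ == "__main__":
    chatbot = ModelRecord("support-chatbot")
    chatbot.completed_checks |= {"data_sources_secured", "customer_consent_verified"}
    print(advance(chatbot))  # prints "testing"
    try:
        advance(chatbot)  # bias screening and performance tests are not yet done
    except PermissionError as err:
        print(err)
```

Keeping the gates as data rather than logic is one possible design choice: it would let a governance board revise the required checks without changing the promotion code.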

Be ready for ChatGPT—and whatever comes next

ChatGPT has put generative AI at the fingertips of any user, creating a dilemma for leaders who want to manage its inherent risks while capturing its productivity benefits. With a governance program that covers AI in all its forms and uses, from business users doing research to development teams adding ChatGPT to products through APIs, organizations can avoid the pitfalls of generative AI today and be ready for the intelligent tools of the future.
