Quick summary: How organizations can transform AI principles into actionable policies that drive innovation, ensure safety, and build trust through effective AI governance.

The state of AI

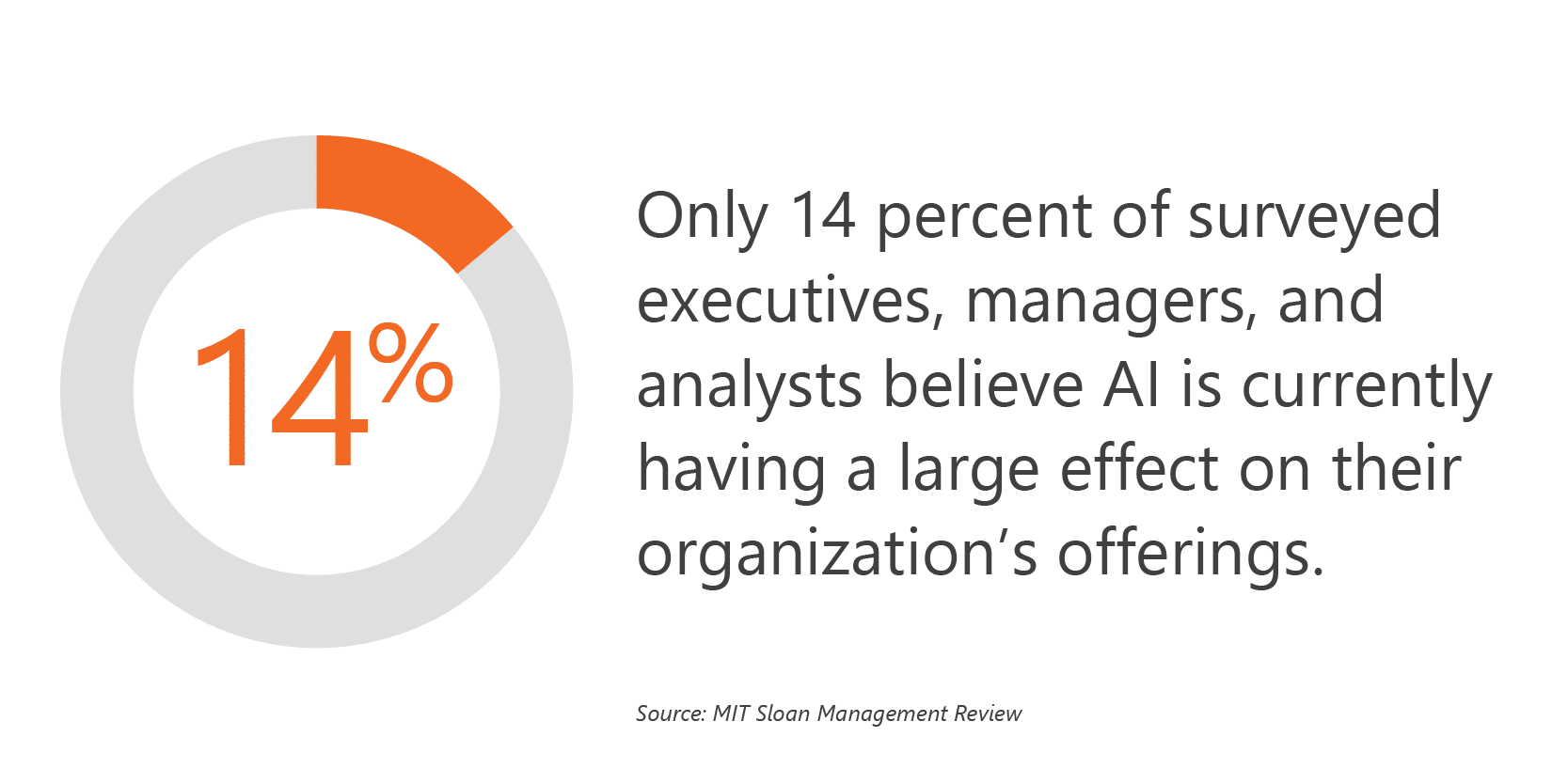

Companies are feeling the pressure. According to a study by MIT Sloan Management Review, only 14 percent of surveyed executives, managers, and analysts believe AI is currently having a large effect on their organization’s offerings. Meanwhile, AI frontrunners like Meta are reportedly spending $100 million per year to attract top research talent. The gap between ambition and implementation is stark.

While some organizations race to scale their AI capabilities, others are still hesitating, citing unresolved questions around data privacy, explainability, and model misuse. These fears aren’t unfounded, but they can’t be an excuse for inaction. The solution isn’t to slow down. It’s to govern better.

So how do you operationalize AI governance in a way that accelerates innovation and ensures safety?


Invert, always invert: A mental model for AI safety

One of the most effective ways to think about AI governance comes from an unexpected place: the German mathematician Carl Jacobi, by way of investor Charlie Munger. Munger famously advised, “Invert, always invert.” In other words, if you want to achieve a good outcome, first work out what would guarantee a bad one, and then avoid those things.

Let’s apply that to AI.

How would you guarantee that your company uses AI unsafely?

- Don’t understand the risks that stem from AI.

- Don’t have AI safety policies in the first place.

- Don’t foster discussions about AI safety; keep teams in silos.

- Don’t make it easy to comply with AI safety policies; let shadow IT flourish.

Once the failure modes are understood, the path forward becomes straightforward:

- Understand the risks that stem from AI.

- Establish clear AI safety policies.

- Break down silos and foster discussions about new and emerging threats and best practices.

- Make monitoring and compliance easy.

Putting it all together: Governance that scales

AI safety is not just about technology; it’s a socio-technical challenge. It requires aligned people, processes, and technologies, guided by a governance structure designed to evolve alongside the systems it supports.

Here’s what that looks like in practice:

- Start with a strong charter. Define the purpose, scope, and goals of your AI governance program.

- Secure executive sponsorship. Leadership buy-in is essential to break down barriers and signal organizational commitment.

- Balance centralization and decentralization. Policies should be standardized where necessary but allow flexibility based on use-case complexity and risk.

- Invest in enablement. Training, office hours, and regular communications help embed safety into everyday operations.

- Make it easy. As Nobel laureate economist Richard Thaler once said, “If you want people to do something, make it easy.” This principle applies to every aspect of responsible AI deployment, from submitting model reviews to accessing risk playbooks.

Closing thoughts

Governance is not about slowing innovation. It’s about unlocking it safely. Moving from high-level AI principles to practical, actionable policies is the next frontier. The companies that get this right will move not only faster but also with greater trust, resilience, and long-term impact.

Accelerate innovation with AI-powered solutions

Logic20/20 helps you turn artificial intelligence into a competitive advantage through strategic planning and responsible implementation. Our experts deliver actionable insights and real-world value through:

- AI strategy & use case development

- Machine learning model design

- Responsible AI frameworks

- AI program implementation & scaling