
Quick summary: The rapid rise of ChatGPT was a wakeup call—and an opportunity—for leaders to implement the principles of responsible AI, ensuring that AI-driven applications are developed responsibly, ethically, and transparently.

Artificial intelligence (AI) has been with us since the 1950s, and even generative AI finds its origins in the earliest chatbots of the 1960s. So when ChatGPT introduced generative AI (Gen AI) into the public arena in November 2022, it offered no new technologies; rather, it aggregated long-standing capabilities into a near-irresistible new package—a conversational intelligence tool that any user can access via any browser at no cost.

As ChatGPT and other Gen AI applications captured the public imagination, it became clear that these tools have potential as both powerful productivity drivers and minefields of risk, particularly once OpenAI made the application available to development teams via APIs.

The disruption that ChatGPT created in the business world points to the larger issue of how organizations can benefit from AI while setting up guardrails against issues such as data privacy breaches and unintentional biases. The answer is a responsible AI governance program, which enables organizations to bring generative AI programs into the fold of IT and allow usage within carefully designed parameters.

Why responsible AI governance is important

Responsible AI governance creates a regulatory framework around the organization’s use of artificial intelligence to ensure that Gen AI and similar tools are used in a responsible, ethical, and transparent manner. Specifically, responsible AI governance helps businesses avoid pitfalls in the following areas:

Security

Unsecured models and data sources leave companies at risk of data leakage and theft, threatening to expose customer and company secrets.

Privacy

Improperly designed models may disregard customer consent and may violate data privacy laws or damage the company’s reputation.

Legal and compliance

Illegal or non-compliant models may subject companies to significant monetary penalties.

Operations

Improperly tested and deployed models may perform poorly, and improperly monitored models may degrade over time, resulting in significant operational challenges.

Ethics

Models that have not been properly screened for bias may harm or discriminate against customers or employees, resulting in significant loss of reputation and legal liability.

Key principles of AI responsibility

Implementing responsible AI requires a commitment to core principles that ensure systems are ethical, equitable, and secure. These principles guide organizations in developing AI solutions that deliver benefits while minimizing risks.

Fairness and inclusiveness

AI systems must treat all users equitably, avoiding discrimination based on factors like gender, ethnicity, or socioeconomic status. For example, biased hiring tools can unfairly exclude qualified candidates, and loan approval algorithms may deny credit to certain groups due to skewed training data. Organizations can leverage fairness assessment tools to help identify and mitigate bias, helping developers build systems that promote equity and inclusivity.
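
As a rough illustration of what a fairness assessment can measure, the sketch below compares selection rates across groups and reports their ratio. It is a minimal example rather than any particular tool's method: the function names, the group labels, and the decision data are all made up for illustration. A ratio well below 1.0 suggests one group is selected far less often than another; a commonly cited rule of thumb in US employment contexts (the "four-fifths rule") treats ratios under 0.8 as a signal for closer review.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is a bool. Both names are hypothetical, chosen for this sketch.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Made-up screening outcomes for two hypothetical applicant groups.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A single ratio like this is only a starting point; it flags a gap but does not explain its cause, which is why it is usually paired with a review of the training data and the features the model relies on.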

Reliability and safety

Safe, reliable AI systems are essential for building trust and ensuring consistent performance, even under unforeseen conditions. For instance, an AI-powered vehicle must operate safely during extreme weather or technical glitches. Similarly, AI systems must be resilient to manipulation or adversarial attacks. Developers can use error analysis tools to monitor and improve system reliability, ensuring that AI solutions meet high standards for safety and dependability.

Transparency and accountability

Transparency in AI decision making is crucial, especially in areas like healthcare and finance, where decisions can significantly impact lives. Tools like responsible AI scorecards enhance interpretability, enabling stakeholders to understand how and why decisions are made. By fostering accountability, these tools empower organizations to take responsibility for AI outcomes and build trust with users.

Privacy and security

Protecting user data is a cornerstone of responsible AI. AI systems must comply with privacy laws and regulations such as GDPR and CCPA to avoid inadvertently exposing sensitive information. For instance, poorly designed models can reveal confidential data during predictive analysis. Organizations should implement robust safeguards to secure data against breaches and attacks, ensuring that AI solutions respect user privacy and maintain regulatory compliance.

Responsible AI examples

Responsible AI in action showcases how organizations can harness AI ethically and effectively. Here are two examples illustrating its practical application:

Example 1: Fairness in hiring processes

Say a global enterprise leverages an AI-powered hiring platform to streamline talent acquisition. Initially, the system exhibits bias against candidates from underrepresented backgrounds due to historical data skewed by past hiring trends. By incorporating fairness assessment tools, the organization can identify and mitigate biases in the algorithm to ensure a more inclusive hiring process, resulting in a diverse workforce while maintaining efficiency.

Example 2: Transparency in medical diagnostics

A healthcare provider might adopt an AI tool for diagnosing rare diseases to improve detection rates and accelerate treatment timelines. Recognizing the need for transparency, they integrate interpretability tools like a responsible AI scorecard. This allows physicians to understand the reasoning behind AI recommendations and ensures they can confidently communicate decisions to patients. By prioritizing transparency and accountability, the provider enhances both patient trust and diagnostic accuracy.

Creating a responsible AI governance board

Successful governance of any capability requires a standing governing body, and AI is no exception. A responsible AI governance board can:

- Establish AI ethics, privacy, and security standards

- Set project charters and KPIs

- Oversee and approve model production

It’s important to assemble a cross-functional board that includes a diverse representation of business concerns relevant to AI safety, including security, privacy, and corporate ethics.

Building a responsible AI governance board is a multi-phase process that requires careful consideration at every turn, as the following phases illustrate:

- Define purpose and goals: Understand what roles the board will play in decision-making, strategic planning, oversight, and governance.

- Identify needed skills and expertise: Determine the skills, knowledge, and expertise required on the board to achieve the organization’s goals effectively.

- Develop board policies and procedures: This phase could include rules on board member selection, term limits, conflict-of-interest policies, meeting frequency, and decision-making processes.

- Recruit board members: Look for individuals who are committed to the organization’s mission and who, together, represent a broad range of business units.

- Formalize governance structure: Create and adopt formal governance documents, such as the organization’s bylaws or constitution.

- Develop strategic plan: Work with the board to develop a plan for executing on objectives and strategies.

- Monitor and evaluate performance: Regularly assess the board’s performance, its effectiveness, and the organization’s progress towards its goals. Tool selection is a key sub-phase here.

- Review and adapt: Periodically review the board’s structure, processes, and policies to ensure they remain relevant and effective in meeting the organization’s needs.

Incorporating responsible AI governance processes

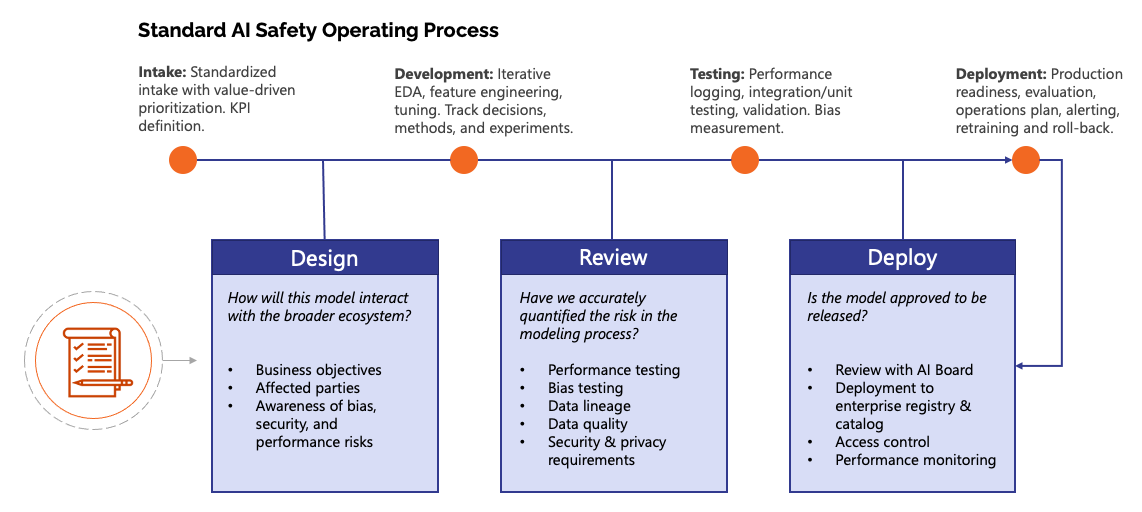

The responsible AI governance process makes safety a standard of practice in the data science lifecycle. Here we’ve illustrated an example of how governance considerations can integrate into the development, testing, and deployment of AI models:
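
One hedged way to picture a governance checkpoint in code is a release gate that blocks deployment until every required review has been signed off. The sketch below is purely illustrative: the review names, the `ModelRelease` class, and the model name are assumptions made for this example, not a prescribed process, and a real board would define its own stages.

```python
from dataclasses import dataclass, field

# Hypothetical review stages; a real governance board would define its own.
REQUIRED_REVIEWS = ("security", "privacy", "bias_testing", "board_approval")


@dataclass
class ModelRelease:
    """Tracks which governance reviews a candidate model has completed."""

    name: str
    completed_reviews: set = field(default_factory=set)

    def missing_reviews(self):
        """List required reviews not yet signed off, in the required order."""
        return [r for r in REQUIRED_REVIEWS if r not in self.completed_reviews]

    def ready_for_production(self):
        """A release is ready only when no required review is outstanding."""
        return not self.missing_reviews()


# Made-up example: a model that has passed only two of the four reviews.
release = ModelRelease("churn-model-v2", {"security", "privacy"})
print(release.missing_reviews())
print(release.ready_for_production())
```

A check like this could run as a step in a deployment pipeline, so that an unreviewed model fails the build rather than relying on someone remembering to ask for approval.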

Challenges in implementing a responsible AI framework

Organizations aiming to implement a responsible AI framework can face numerous challenges, both technical and ethical. Ensuring fairness, maintaining transparency, and adhering to privacy regulations require thoughtful strategies and robust tools.

Data security

Developers must navigate stringent regulations like GDPR while balancing the need for actionable insights. For instance, models trained on sensitive datasets risk exposing private information, even unintentionally. Tools can help address this by enabling differential privacy, allowing organizations to use data effectively while safeguarding individual identities.
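
To make the idea of differential privacy more concrete, the sketch below applies the Laplace mechanism to a simple counting query: it computes the true count and then adds random noise scaled by a privacy parameter, `epsilon`, so that no single individual's presence can be confidently inferred from the result. This is a minimal teaching example under stated assumptions; the function names and the sample data are hypothetical, and production systems would normally rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with rate 1/scale
    follows a Laplace distribution with that scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the items in `values` matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the count by at most 1), so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Made-up ages; the query asks how many people are 40 or older.
ages = [23, 35, 41, 29, 52, 61, 38, 47]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=random.Random(0))
print(noisy)
```

The trade-off sits in `epsilon`: a small value hides individuals well but makes each answer less accurate, while a large value gives sharper answers with weaker protection.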

Bias in AI systems

AI systems can perpetuate and even amplify bias when trained on skewed or incomplete datasets. For example, biased datasets used in criminal justice algorithms have led to unfair sentencing decisions, disproportionately affecting certain groups. Reducing such biases requires using representative data, conducting rigorous testing in diverse environments, and employing fairness assessment tools. Failure to address bias can erode trust, perpetuate inequalities, and lead to reputational damage.

Preparing for the future with responsible AI

ChatGPT and other generative AI platforms have revolutionized how users interact with technology, offering immense productivity benefits while introducing significant risks. For leaders, the challenge lies in managing these risks through a robust governance framework that prioritizes the principles of responsible AI—fairness, transparency, reliability, and data security.

By implementing a governance program that addresses AI in all its forms and use cases, from business users conducting research to development teams integrating AI into products, organizations can navigate today’s challenges with confidence and prepare for the intelligent tools of the future. Responsible AI isn’t just about mitigating risks—it’s about building a foundation for sustainable innovation.
