AI is everywhere, but impact is not. Enterprises are racing to integrate artificial intelligence into their operations, yet many find themselves stuck in pilot mode or pushing projects into production only to see them underperform.

According to Forrester, up to 90 percent of AI pilots never reach production, underscoring the deeper issue that technology alone doesn’t drive results. Even among projects that do move forward, only 4 percent yield significant returns on investment. Most either stall or underdeliver.

What separates stalled initiatives from value-driving solutions isn’t just access to advanced tools. It’s culture. The organizations pulling ahead are those that foster an environment where experimentation is the norm and where teams are empowered to question, test, and reimagine how work gets done.

In this article, we’ll explore how senior leaders can build and scale a culture of AI experimentation by focusing on the structures, mindsets, and practices that turn curiosity into impact.

Table of contents

- From pilot purgatory to progress: Making experimentation a core capability

- Build the scaffolding for creative AI use

- Empower influencers in the gap between strategy and execution

- Build a “fence around the playground” with structure and guardrails

- Reach the quiet corners: Don’t assume silence equals success

- Measure what matters—beyond pilot counts

- The real differentiator


From pilot purgatory to progress: Making experimentation a core capability

Launching an AI pilot is only step one. Sustaining momentum and translating early tests into business value is the much harder journey that follows. Many organizations fall into “pilot purgatory,” where projects start with enthusiasm but stall before they can scale. Even initiatives that reach production can falter without the cultural support needed for ongoing iteration and improvement.

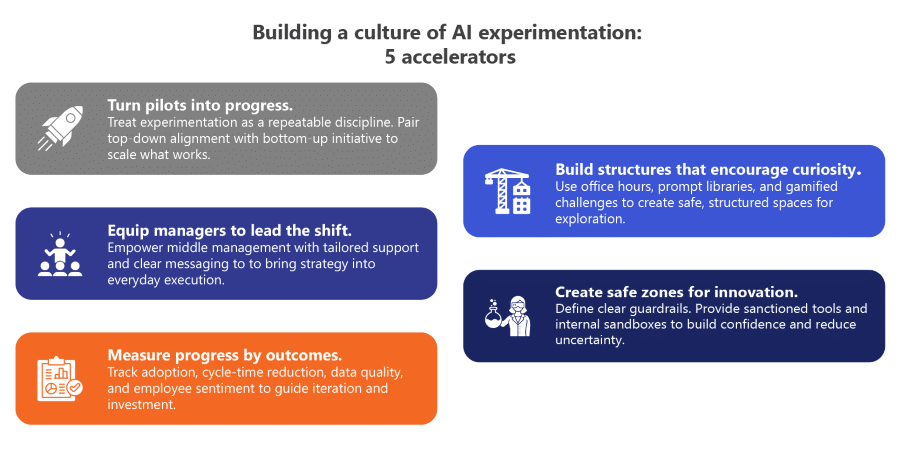

AI experimentation should be treated as a repeatable organizational discipline. It delivers the most value when integrated into the ways teams solve problems, make decisions, and improve processes over time. This approach requires clear structures and incentives that support continuous learning. The goal isn’t simply to prove that AI works, but to foster a culture that learns, adapts, and builds confidence with every iteration.

Despite strong initial momentum, most initiatives never cross the threshold into scalable value. The average company manages 33 AI pilots but successfully scales only four, pointing to deeper gaps in how organizations structure, incentivize, and sustain experimentation.

Progress accelerates when top-down commitment meets bottom-up initiative. Executive leaders can spark momentum by modeling curiosity, funding small-scale experiments, and aligning experimentation with business priorities. At the same time, teams on the ground need the freedom, tools, and psychological safety to explore use cases that matter to them. When both elements are in place, organizations create a cycle of testing and learning that drives scalable impact.

Build the scaffolding for creative AI use

Encouraging experimentation means creating the right conditions for curiosity to drive action. When organizations build intentional structures for learning, sharing, and exploring, they make it easier—and safer—for employees to try new things.

Create space for ongoing dialogue

Engagement channels such as office hours, discussion forums, and drop-in labs provide space for employees to exchange ideas, troubleshoot challenges, and showcase early wins. At Logic20/20, internal office hours and community forums have served as a successful model for fostering open discussion across teams with varying levels of AI fluency.

These forums must be actively facilitated to stay relevant. Without guidance and outreach, even well-intentioned channels can go quiet over time. Rotating hosts, sharing real use cases, and incorporating leadership drop-ins can help keep conversations productive and energized.

Equip teams with shared starting points

Knowledge-sharing resources such as prompt libraries and prompt competitions help teams build on one another’s progress. At Logic20/20, we’ve supported clients in launching engagement campaigns, such as “prompt of the week” and “prompt bingo,” to inspire creativity, encourage reuse, and scale learnings from early adopters.

Instead of starting from scratch, employees can adapt proven prompts and workflows to suit their own needs. These resources accelerate experimentation and reinforce community-based learning.

Use gamification to unlock creative thinking

Gamification adds energy and engagement to the experimentation process. Unlike traditional training, which focuses on step-by-step use, gamified programs reward exploration, collaboration, and creative problem-solving. By encouraging teams to discover new use cases and rethink existing tasks, gamification turns experimentation into a shared pursuit that drives real momentum across business units.


Empower influencers in the gap between strategy and execution

Senior leaders may define the vision, but middle managers are responsible for translating it into day-to-day execution. In building a culture of AI experimentation, this “middle layer” plays a pivotal role. These managers shape team priorities, reinforce expectations, and influence how new ideas are adopted across functions.

Use empathy mapping to uncover motivators and blockers

To engage middle managers effectively, organizations need to understand their point of view and help them bring their teams along. Empathy mapping is a useful tool for uncovering what these individuals and their teams are seeing, hearing, thinking, and feeling in their day-to-day roles, using questions such as:

- Are they under pressure to deliver short-term results?

- Are they unsure how to assess AI tools?

- Are they fielding questions from their teams without clear guidance?

By mapping out these perspectives, leaders can better identify barriers to experimentation—and design more targeted support.

Tailor outreach based on manager personas

Not all managers are motivated by the same things. Some will be energized by the novelty of AI and eager to experiment. Others may hesitate without a clear connection to team goals, KPIs, or job expectations. Outreach and enablement strategies should reflect these differences. For some personas, a how-to workshop will be enough. For others, aligning experimentation with performance metrics or risk reduction will be essential.

Managers who feel equipped and supported can act as amplifiers, not gatekeepers. With the right tools and messages, they become active enablers of innovation, helping to scale experimentation where it matters most.


Build a “fence around the playground” with structure and guardrails

Even the most capable teams may hesitate to experiment with AI if they fear breaking policy, misusing data, or introducing risk. Those concerns are well-founded, and when guidelines are unclear, uncertainty tends to outweigh curiosity. A recent study found that 94 percent of CEOs believe their employees are using generative AI tools without company approval or oversight. At the same time, only 25 percent of organizations report having a fully implemented AI governance program, leaving teams to navigate new technologies without clarity or confidence. Organizations that want to foster responsible experimentation must reduce ambiguity and provide safe, supported pathways for testing new ideas.

Establish clear, actionable governance

Employees are more likely to experiment when they understand the boundaries. Governance frameworks should outline which tools are approved, what data can be used, and how results will be reviewed. This governance must be embedded in day-to-day workflows and communicated in accessible, role-specific ways to provide employees with a clear path for safe use.

Provide safe, supported environments

Sanctioned tools and internal sandboxes can help teams build confidence by removing technical and compliance barriers. A well-designed environment gives employees room to explore, while keeping experimentation aligned with organizational standards.

Support structures such as centers of excellence or innovation offices can offer additional value. For example, we recently worked with a utility to establish a center of excellence that empowers employees to drive AI use cases from idea to implementation. To support that work, the office provides architecture templates, coaching, structure, and guidance on defining KPIs. The process encourages ownership while helping teams deliver measurable results.

Move from passive use to active experimentation

When employees have the tools, guidance, and support to act, they shift from passive consumers of AI to active contributors. With the right supportive structures in place, experimentation becomes less about risk and more about opportunity.

Reach the quiet corners: Don’t assume silence equals success

A culture of experimentation can’t rely solely on visible enthusiasm. While some employees readily share ideas and dive into new tools, others hesitate because they are unsure where to start, uncertain of expectations, or simply unaware of available support. Without deliberate outreach, these quiet corners of the organization risk being left behind.

A recent LinkedIn study found that 35 percent of professionals feel nervous discussing AI at work, often fearing they’ll sound uninformed; another 33 percent admit feeling embarrassed by how little they understand. These quiet blockers often go unnoticed unless leaders actively create space for questions and guided exploration.

Track where engagement is lagging

Adoption metrics can reveal more than just usage rates. They can point to teams, departments, or roles that may be hesitant to experiment—or are struggling to find relevance in the tools provided. By reviewing usage data at a team or function level, leaders can identify where additional engagement may be needed.
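For organizations that already log tool usage, this kind of review can start very simply. The Python sketch below is illustrative only: it assumes a hypothetical per-employee usage record (`UsageRecord` with `team`, `employee_id`, and `used_ai_tool` fields) and an arbitrary 30 percent threshold. Real telemetry schemas and sensible thresholds will differ from one organization to the next.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    """One employee's activity in a reporting period (hypothetical schema)."""
    team: str
    employee_id: str
    used_ai_tool: bool


def adoption_by_team(records):
    """Return the share of each team's employees who used an approved AI tool."""
    everyone = defaultdict(set)  # team -> all employee IDs seen
    users = defaultdict(set)     # team -> employee IDs with any AI usage
    for r in records:
        everyone[r.team].add(r.employee_id)
        if r.used_ai_tool:
            users[r.team].add(r.employee_id)
    return {team: len(users[team]) / len(ids) for team, ids in everyone.items()}


def lagging_teams(records, threshold=0.3):
    """List (team, rate) pairs below the assumed threshold, lowest rate first."""
    rates = adoption_by_team(records)
    flagged = [(team, rate) for team, rate in rates.items() if rate < threshold]
    return sorted(flagged, key=lambda pair: pair[1])


if __name__ == "__main__":
    sample = [
        UsageRecord("Finance", "f1", True),
        UsageRecord("Finance", "f2", False),
        UsageRecord("Finance", "f3", False),
        UsageRecord("Finance", "f4", False),
        UsageRecord("Marketing", "m1", True),
        UsageRecord("Operations", "o1", False),
    ]
    for team, rate in lagging_teams(sample):
        print(f"{team}: {rate:.0%} adoption, consider targeted outreach")
```

A flagged team is a prompt for supportive follow-up, not a verdict on performance; low adoption may simply mean the available tools do not fit that team’s work.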

Follow up with support, not scrutiny

Silence doesn’t always signal disinterest. In many cases, it reflects uncertainty or a lack of enablement. Targeted outreach—such as check-ins, listening sessions, or peer-led demos—can uncover hidden barriers and clarify next steps. The goal isn’t to enforce participation, but to create space for it by addressing unmet needs.

Measure what matters—beyond pilot counts

When experimentation becomes part of the culture, measurement needs to evolve alongside it. Counting the number of pilots launched may look like progress, but it rarely tells the full story. Without meaningful metrics, organizations risk confusing activity with impact.

Shift focus from activity to outcomes

High-performing organizations track the signals that indicate whether experimentation is creating value, improving processes, or informing decisions. Meaningful measurement separates isolated wins from scalable impact. Useful metrics include:

- Voluntary adoption: Are teams choosing to use AI tools outside of mandated programs?

- Cycle-time reduction: Are tasks being completed faster or more efficiently as a result of experimentation?

- Data quality: Is data becoming more complete and reliable (e.g., fewer gaps, cleaner fields) in ways that support scalable AI use?

- Employee perceptions: Do employees feel that AI is helping them produce quality work, or do they feel that AI-generated “workslop” makes true productivity gains difficult to see?
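The first two signals reduce to simple arithmetic once the underlying data is collected. The Python sketch below is illustrative only: the function names, the comparison of active users against a set of employees enrolled in mandated programs, and the use of median task duration as the cycle-time measure are all assumptions rather than a prescribed methodology.

```python
from statistics import median


def voluntary_adoption_rate(active_users, mandated_users):
    """Share of active AI users who are not enrolled in a mandated program.

    Both arguments are sets of employee IDs (hypothetical inputs).
    """
    if not active_users:
        return 0.0
    voluntary = active_users - mandated_users
    return len(voluntary) / len(active_users)


def cycle_time_reduction(baseline_hours, current_hours):
    """Percent drop in median task cycle time versus a pre-experiment baseline."""
    before = median(baseline_hours)
    after = median(current_hours)
    if before <= 0:
        raise ValueError("baseline median must be positive")
    # A positive result means tasks got faster; a negative one means slower.
    return (before - after) * 100 / before


if __name__ == "__main__":
    print(voluntary_adoption_rate({"a", "b", "c", "d"}, {"a"}))
    print(cycle_time_reduction([10, 12, 8], [7, 9, 8]))
```

For example, if four employees are active and one was enrolled through a mandated program, voluntary adoption is 75 percent; a median cycle time that falls from 10 hours to 8 hours is a 20 percent reduction. Data quality and employee perceptions usually call for audits and surveys rather than a single formula.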

Position experimentation as a smart investment

Not every experiment will succeed, but each one should offer insights. When framed as a cost-effective way to accelerate learning, experimentation becomes a strategic asset that reduces uncertainty and helps avoid costlier missteps later.

Leaders who prioritize the right metrics can scale what works, refine what doesn’t, and demonstrate tangible progress over time. This reframing also helps leaders make a stronger case for continued investment, especially in environments where budgets are tight or results are closely scrutinized.

The real differentiator

The true test of AI readiness lies beyond the tech stack, in how the organization responds when the answers aren’t obvious. A culture of experimentation creates room for that ambiguity. It shifts the conversation from “What tool should we deploy?” to “What’s worth trying, and what are we learning?”

In a landscape defined by rapid change and increasing pressure to deliver results, leaders who make space for exploration signal something important: progress is measured not only by outcomes but also by the questions an organization is willing to ask. Over time, those questions become sharper, the risks more calculated, and the wins more scalable.

Building a culture of AI experimentation doesn’t happen overnight. But the organizations that invest in it now will be the ones that move with greater confidence, clarity, and speed—no matter how AI and the business landscape continue to evolve.
