Executive summary: As enterprises move beyond isolated AI pilots, many find that success in production depends not on algorithms but on the infrastructure and discipline around them. This article introduces five control planes that help organizations enforce safeguards, standardize operations, and ensure reliability at scale. It offers practical guidance on intake, risk review, performance monitoring, and adoption, giving leaders a path from prototypes to enterprise services they can run with confidence.

Across industries, AI pilots often deliver quick wins, but production is where most initiatives falter. Up to 90 percent of pilots never scale, and 60 percent of AI delays trace back to data and process gaps rather than model performance. The barrier isn’t innovation; it’s the absence of an operating backbone that makes innovation reliable, governed, and cost-effective. As enterprises shift from experimentation to deployment, building that backbone becomes essential. In this article, we explore five control planes that offer a framework for scaling AI into sustainable enterprise services.

Why POCs stall: The missing backbone

Proving value in a pilot is relatively easy; sustaining it in production is not. Without an operating backbone, most enterprises struggle to carry early wins forward. The most common failure? Lack of sustained leadership commitment. Without executive sponsorship and accountability, AI initiatives lack the funding, visibility, and governance required to scale.

Additional pitfalls include intake processes that flood the pipeline with uncoordinated experiments, governance frameworks bolted on too late, and the absence of reliability targets such as service-level objectives (SLOs). Layer on unchecked API costs and fragmented oversight of security and compliance, and even strong pilots can collapse.

Enterprises that move from proofs of concept (POCs) to impactful deployments take a disciplined approach. They consolidate intake through a single channel, apply a common rubric for scoring risk and return, and enforce guardrails that govern how projects advance.

These practices channel resources into the use cases most likely to scale, rather than scattering effort across experiments that will never reach production.
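As a rough illustration, a common rubric can be as simple as a weighted score applied to every intake request. The Python sketch below assumes a hypothetical 1-to-5 scale for value, feasibility, and risk, with illustrative weights and a hypothetical risk ceiling above which proposals are escalated rather than queued. The names `UseCase`, `score`, and `rank` are invented for this example; each organization would calibrate its own criteria.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Illustrative weights for a return-versus-risk rubric (hypothetical; calibrate locally).
WEIGHTS = {"value": 0.5, "feasibility": 0.3, "risk": 0.2}
MAX_RISK = 4  # hypothetical guardrail: proposals riskier than this go to escalation


@dataclass
class UseCase:
    name: str
    value: int        # 1 (low) to 5 (high) expected business return
    feasibility: int  # 1 (hard) to 5 (easy) given available data and skills
    risk: int         # 1 (low) to 5 (high) regulatory or reputational exposure


def score(uc: UseCase) -> float:
    """Weighted score; risk is inverted so that lower risk raises the total."""
    return (WEIGHTS["value"] * uc.value
            + WEIGHTS["feasibility"] * uc.feasibility
            + WEIGHTS["risk"] * (6 - uc.risk))


def rank(use_cases: List[UseCase]) -> Tuple[List[UseCase], List[UseCase]]:
    """Return (ranked queue, escalation list) from a batch of intake requests."""
    queue = sorted((uc for uc in use_cases if uc.risk <= MAX_RISK),
                   key=score, reverse=True)
    escalate = [uc for uc in use_cases if uc.risk > MAX_RISK]
    return queue, escalate


if __name__ == "__main__":
    queue, escalate = rank([
        UseCase("invoice triage", value=4, feasibility=4, risk=2),
        UseCase("contract drafting", value=5, feasibility=2, risk=5),
        UseCase("FAQ assistant", value=3, feasibility=4, risk=1),
    ])
    print([uc.name for uc in queue])     # ['invoice triage', 'FAQ assistant']
    print([uc.name for uc in escalate])  # ['contract drafting']
```

Keeping high-risk proposals out of the ranked queue, rather than merely scoring them lower, is one way to express a guardrail on how projects advance.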

Article continues below.

Created for CIOs, our playbook outlines 5 imperatives for scaling AI successfully—and sustainably—across the organization.

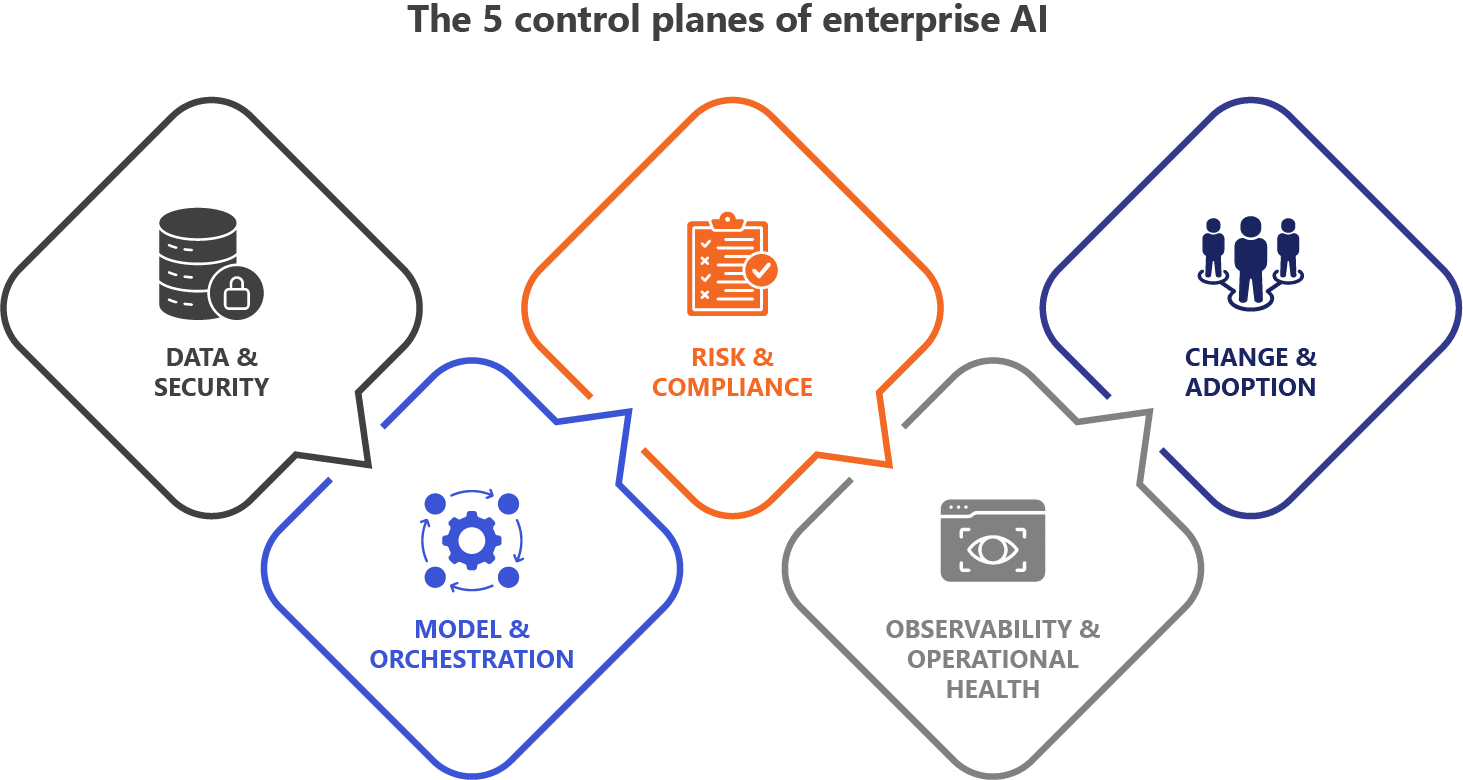

The five control planes of enterprise AI

Scaling AI requires a framework that ensures reliability, security, and measurable value across the lifecycle. The five control planes provide that framework as distinct but interconnected layers that govern data, orchestration, risk, performance, and adoption.

1. Data and security plane

Data and security form the foundation of any scalable AI program. Pilots often succeed using curated datasets, but those conditions rarely hold in production. When data is flawed, incomplete, or inconsistent, AI systems replicate and often magnify the underlying issues. High-performing models cannot compensate for poor data quality.

As AI adoption increases, the supporting data environment must mature in parallel. Enterprises need to improve not only data quality and governance, but also the structure and availability of new inputs that AI systems can interpret and apply. Rather than chasing complete coverage across all systems, successful programs begin by governing the data that supports the most critical, high-volume processes. Focusing on this high-value subset reduces compliance risk and creates a scalable foundation for future growth.

Action items

- ✅Classify sensitive fields (PII, financials, health data, etc.) and tag them for compliance tracking.

- ✅Document data lineage so teams can trace outputs back to sources when questions arise.

- ✅Assign data owners with authority to enforce stewardship policies.

- ✅Implement dashboards to monitor quality and flag anomalies in real time.

- ✅Restrict permissions once assets are promoted to production, ensuring changes are deliberate and auditable.
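Classification often starts with simple rules that stewards then refine. The sketch below assumes a hypothetical set of column-name patterns for three tag types. Name matching alone misses sensitive values hidden in free-text fields, so in practice it would be paired with content scanning and review by the assigned data owners; untagged columns are returned separately so stewards can confirm them.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical name patterns for compliance tags; extend per your own data catalog.
SENSITIVE_PATTERNS = {
    "PII": re.compile(r"name|email|phone|ssn|address|birth", re.IGNORECASE),
    "FINANCIAL": re.compile(r"account|iban|card|salary|balance", re.IGNORECASE),
    "HEALTH": re.compile(r"diagnosis|medication|patient", re.IGNORECASE),
}


def tag_columns(columns: List[str]) -> Tuple[Dict[str, List[str]], List[str]]:
    """Map each matching column to its tags; list unmatched columns for steward review."""
    tagged: Dict[str, List[str]] = {}
    unmatched: List[str] = []
    for column in columns:
        tags = [tag for tag, pattern in SENSITIVE_PATTERNS.items()
                if pattern.search(column)]
        if tags:
            tagged[column] = tags
        else:
            unmatched.append(column)
    return tagged, unmatched
```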

2. Model and orchestration plane

Pilots often succeed as tightly managed one-offs, but scaling demands consistency. AI services must follow shared patterns for invocation, supervision, and improvement. Without orchestration standards, enterprises end up with a patchwork of prompts, retrieval methods, and routing logic that leaders cannot monitor or govern. Uniform practices enable evaluation and promotion, ensuring only validated assets reach production.

Action items

- ✅Standardize how prompts, retrieval strategies, and routing are structured across teams.

- ✅Maintain a service or capability registry so models, tools, and agents can be tracked and reused.

- ✅Apply version control to prompts and templates to prevent drift and confusion.

- ✅Require peer review before models move forward, with documented criteria for reproducibility.

- ✅Define a clear promotion path—from sandbox to staging to production—with documented gates and rollback procedures.
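A promotion path becomes enforceable once each stage has explicit entry checks. The sketch below models one versioned asset (for example, a prompt template) moving from sandbox to staging to production. The gate check names are hypothetical placeholders for whatever criteria a team documents, and rollback here simply restores the previously live version in a stage.

```python
from typing import Dict, List, Optional, Set

STAGES = ("sandbox", "staging", "production")

# Hypothetical gate checks a version must have passed before entering each stage.
GATES: Dict[str, Set[str]] = {
    "sandbox": set(),
    "staging": {"peer_review", "offline_eval"},
    "production": {"peer_review", "offline_eval", "risk_review", "rollback_plan"},
}


class PromotionPipeline:
    """Tracks which version of a single asset is live in each stage."""

    def __init__(self) -> None:
        # Each stage keeps a stack of deployed versions; the last entry is live.
        self.deployed: Dict[str, List[str]] = {stage: [] for stage in STAGES}
        self.passed: Dict[str, Set[str]] = {}

    def record_check(self, version: str, check: str) -> None:
        self.passed.setdefault(version, set()).add(check)

    def live(self, stage: str) -> Optional[str]:
        stack = self.deployed[stage]
        return stack[-1] if stack else None

    def promote(self, version: str, target: str) -> None:
        """Deploy to target only from the previous stage and only if its gate is met."""
        idx = STAGES.index(target)
        if idx > 0 and self.live(STAGES[idx - 1]) != version:
            raise PermissionError(f"{version} is not live in {STAGES[idx - 1]}")
        missing = GATES[target] - self.passed.get(version, set())
        if missing:
            raise PermissionError(f"{version} blocked at {target}: missing {sorted(missing)}")
        self.deployed[target].append(version)

    def rollback(self, stage: str) -> Optional[str]:
        """Withdraw the live version in a stage and return the one restored, if any."""
        if self.deployed[stage]:
            self.deployed[stage].pop()
        return self.live(stage)
```

Requiring a version to be live in the preceding stage prevents teams from skipping straight to production, which is the point of a documented path.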

3. Risk and compliance plane

The move from pilot to production raises the stakes. What began as a low-risk experiment can now send customer communications, execute financial transactions, or process sensitive data at scale. Without structured review, enterprises face runaway costs, data exposure, and reputational damage. To mitigate these risks, every deployment should pass through a pre-production gate—an Agent Risk Review—to confirm it is deliberate, compliant, and accountable.

The review should address:

- Business scope: Is the agent’s purpose clearly defined and aligned with organizational goals?

- Data safeguards: Are sensitive fields protected (e.g., via masking, encryption, tokenization), and is lineage documented for traceability?

- Security: Does the design respect least-privilege principles and access controls?

- Safety: Are prohibited behaviors blocked, with a kill switch in place?

- Evaluation: Have offline tests and peer reviews been completed, with results logged?

- Operations and cost: Who manages uptime, SLOs, rollback readiness, and budget consumption?

- Compliance: Does the deployment meet all legal and regulatory requirements?

Action items

- ✅Make the Agent Risk Review a mandatory pre-production step.

- ✅Establish guardrails that require sign-off from designated stakeholders before deployment.

- ✅Log all review outcomes for transparency and audit readiness.

- ✅Conduct peer reviews of the agent design to validate risk safeguards, security posture, and projected cost impact.

- ✅Re-run the review when material changes occur, with “material” defined according to pre-established organizational criteria.
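The review can be captured as a simple record so that no deployment proceeds with open gaps and every outcome is logged. The sketch below encodes the seven review areas listed above; the sign-off roles are hypothetical, and what counts as a material change remains an organizational decision that the code only records.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The seven review areas from the Agent Risk Review.
REVIEW_AREAS = (
    "business_scope", "data_safeguards", "security", "safety",
    "evaluation", "operations_and_cost", "compliance",
)
# Hypothetical sign-off roles; each organization designates its own stakeholders.
REQUIRED_SIGNOFFS = {"business_owner", "risk", "security"}


@dataclass
class AgentRiskReview:
    agent: str
    areas_passed: Set[str] = field(default_factory=set)
    signoffs: Set[str] = field(default_factory=set)
    log: List[str] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """List every unmet review area and missing sign-off."""
        missing = [f"area:{a}" for a in REVIEW_AREAS if a not in self.areas_passed]
        missing += [f"signoff:{r}" for r in sorted(REQUIRED_SIGNOFFS - self.signoffs)]
        return missing

    def decide(self) -> bool:
        """Approve only when nothing is missing, and log the outcome for audit."""
        gaps = self.gaps()
        outcome = "APPROVED" if not gaps else "BLOCKED " + ", ".join(gaps)
        self.log.append(f"{self.agent}: {outcome}")
        return not gaps

    def material_change(self, description: str) -> None:
        """Reset approvals so the full review must be re-run."""
        self.areas_passed.clear()
        self.signoffs.clear()
        self.log.append(f"{self.agent}: review reset ({description})")
```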

4. Observability and operational health plane

Without visibility, enterprises risk degraded performance, rising costs, and weak adoption that quietly erode business value. Treating AI as a critical service requires clear SLOs, active monitoring, and metrics that link reliability to real-world outcomes.

As AI deployments grow, teams must implement structured observability practices that track performance, user engagement, and the operational cost of scaled usage.

Key dimensions to monitor include:

- Latency: Do responses arrive fast enough to support the workflows that depend on them?

- Uptime: Is the service consistently available to end users?

- Error budgets: What level of failure is tolerable, and how is it enforced?

- Cost per task: Are unit economics sustainable as workloads grow?

- Adoption: Are users voluntarily choosing the AI service over alternatives?

Action items

- ✅Build dashboards that surface reliability, latency, cost, and adoption metrics in real time.

- ✅Run rollback drills to confirm services can be restored quickly when issues arise.

- ✅Freeze releases automatically when error budgets are exceeded.

- ✅Publish reports that keep stakeholders aligned on cost and performance.

- ✅Quarantine or retrain models when drift or runaway costs are detected.
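Error budgets turn an SLO into arithmetic. Assuming a request-based availability SLO (one common way to define one), a 99.9 percent target over 100,000 requests tolerates about 100 failed requests; once failures pass that number, releases freeze. The sketch below also computes cost per task. The class and field names are hypothetical, and the figures are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ServiceWindow:
    """Usage and reliability figures for one measurement window (hypothetical schema)."""
    slo_target: float      # e.g. 0.999 means 99.9% of requests must succeed
    total_requests: int
    failed_requests: int
    total_cost: float      # spend for the window, in any currency unit
    completed_tasks: int

    def __post_init__(self) -> None:
        if not 0 < self.slo_target < 1:
            raise ValueError("slo_target must be strictly between 0 and 1")

    def error_budget(self) -> float:
        """Failures the SLO tolerates in this window."""
        return (1 - self.slo_target) * self.total_requests

    def budget_consumed(self) -> float:
        """Share of the error budget already spent; above 1.0 means exceeded."""
        budget = self.error_budget()
        if budget == 0:
            return 0.0 if self.failed_requests == 0 else float("inf")
        return self.failed_requests / budget

    def releases_frozen(self) -> bool:
        """Freeze feature releases once failures exceed the error budget."""
        return self.failed_requests > self.error_budget()

    def cost_per_task(self) -> float:
        """Unit cost; infinite if nothing was completed in the window."""
        if self.completed_tasks == 0:
            return float("inf")
        return self.total_cost / self.completed_tasks
```

Because the budget is a fraction of traffic rather than a fixed failure count, the freeze threshold scales automatically as workloads grow.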

5. Change and adoption plane

Scaling AI requires both top-down governance and grassroots engagement. A lightweight AI Center of Excellence (CoE) provides centralized oversight, while business units drive local rollout. The CoE defines standards, manages intake and prioritization, and ensures governance. A champions’ network spreads literacy, accelerates uptake, and surfaces feedback from the front lines. Together, these mechanisms embed AI into everyday work.

Action items

- ✅Establish an AI CoE with representation from business, technical, risk, and compliance teams.

- ✅Create a central intake form and evaluation framework to rank use cases consistently.

- ✅Maintain prioritization dashboards that give leadership visibility into the pipeline.

- ✅Build a champions’ network across business units to serve as local advocates and problem-solvers.

- ✅Implement a regular communication cadence (e.g., newsletters, spotlight stories, internal demos) to reinforce adoption.

- ✅Assign clear accountability: The CoE owns communication and governance, while business units manage rollout and ongoing use.

Leading the way: Executive decisions for scaling with impact

The five control planes provide the structure, but leadership commitment determines whether that structure holds. Across industries, AI pilots often falter not because of technical shortcomings but because executive sponsorship fades after the initial prototype. When leadership fails to prioritize funding, governance, and accountability, even high-potential pilots collapse under the weight of ambiguity and drift.

Executives set the tone through decisions on governance, ownership, release discipline, and measurement. These choices determine whether AI matures into a dependable enterprise capability or fragments into isolated experiments.

Define the governance model

Decide what to centralize and what to delegate. Data stewardship and evaluation assets often require central oversight, while prompt libraries and workflow refinements can be managed by business units.

Assign ownership

Hold specific leaders accountable for risk reviews and monitoring. A CoE can set standards, but named executives must own uptime, budgets, and rollback readiness.

Enforce release discipline

Link release velocity to reliability. When a service exceeds its error budget, pause feature rollouts until stability improves.

Standardize the metrics canon

Track a consistent set of measures across all AI services:

- Adoption: Voluntary use over alternatives

- Cycle-time delta: Change in process cycle time relative to the baseline

- Green-data coverage: Percentage of mission-critical data fields meeting quality standards

- Regret spend: Capital tied up in pilots that should have been retired
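Each measure in the canon reduces to a simple ratio or sum once its inputs are defined. The sketch below assumes hypothetical input fields. How users are counted as voluntary, and which pilots are flagged for retirement, are judgment calls each organization would set; the formulas do not decide them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CanonInputs:
    voluntary_users: int          # chose the AI service where an alternative existed
    eligible_users: int           # could have chosen it
    baseline_cycle_hours: float   # average process time before the AI service
    current_cycle_hours: float    # average process time with the AI service
    critical_fields: int          # mission-critical data fields in scope
    fields_meeting_standard: int  # of those, fields passing quality checks
    retirable_pilot_spend: float  # spend on pilots flagged for retirement


def metrics_canon(m: CanonInputs) -> Dict[str, float]:
    """Compute the four canon metrics; assumes nonzero denominators."""
    return {
        "adoption": m.voluntary_users / m.eligible_users,
        # Negative values mean the process is now faster than baseline.
        "cycle_time_delta": (m.current_cycle_hours - m.baseline_cycle_hours)
        / m.baseline_cycle_hours,
        "green_data_coverage": m.fields_meeting_standard / m.critical_fields,
        "regret_spend": m.retirable_pilot_spend,
    }
```

For example, 320 voluntary users out of 400 eligible gives an adoption rate of 0.8, and a process that drops from 10 hours to 7.5 hours shows a cycle-time delta of -0.25.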

From pilots to performance

Scaling AI is not just a technical upgrade. It is an organizational test of discipline and resolve. Enterprises that effectively scale AI run it as a managed service—governed, accountable, and built for adoption. The five control planes provide the structure, but impact depends on how decisively leaders put them into practice.

Leaders must be willing to pause when reliability falters, retire pilots that drain resources, and empower champions across the organization to drive change. Competitors are not waiting for perfect conditions, and neither should you.

The real question is no longer whether AI pilots prove value. They already do. The question is whether the enterprise has the operating backbone to turn that value into impact at scale.