Executive summary: Agentic AI is reshaping automation, moving beyond rules and scripts to systems that anticipate needs and act with autonomy. But scaling too quickly without the right foundations can lead to stalled pilots, compliance gaps, and operational risk. This guide outlines six critical steps every organization must take to get automation ready for agentic AI, from defining use cases and strengthening data foundations to building governance and scaling responsibly.

Automation is evolving fast. What began as rules-based workflows and robotic process automation (RPA) is now expanding into agentic AI: systems capable of understanding goals, reasoning about context, and acting without constant human guidance.

Unlike traditional automation or even standard generative AI, agentic systems are goal-driven and proactive. They don’t just respond to instructions; they anticipate needs, plan next steps, and adapt to new information in real time.

This shift opens powerful new possibilities, but also raises pressing questions. Is your organization ready to deploy agents that act independently? Are your processes, data, and governance structures mature enough to support them?

Whether or not your organization is actively pursuing agentic AI, it will soon show up in the applications your teams already use. Yet most enterprises still lag in governance, maturity, and readiness, creating risks of shadow AI, compliance gaps, and stalled pilots.

We wrote this guide for leaders and teams trying to move from hype to impact with agentic AI:

- Business and technology leaders evaluating how AI fits with strategy and risk appetite

- IT and operations teams charged with implementing automation securely and at scale

- Business users and innovators experimenting with AI and automation to improve their workflows

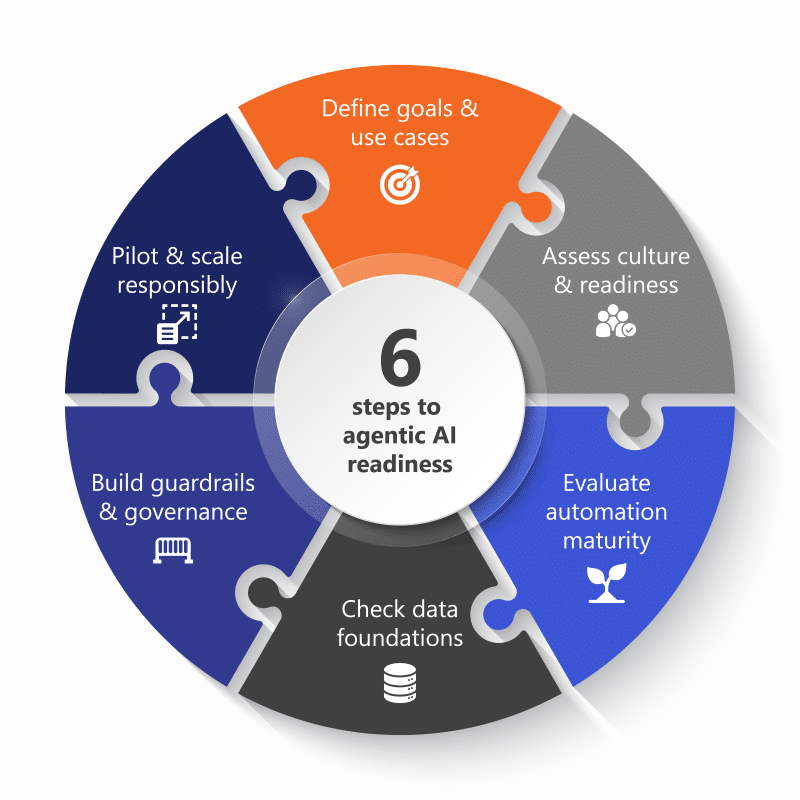

Preparing for agentic AI requires more than technology. It demands clarity of purpose, cultural alignment, mature processes, strong data foundations, and built-in governance. The following six steps provide a practical roadmap to assess readiness and scale responsibly, ensuring that experimentation translates into lasting impact.

The goal isn’t to slow down experimentation. It’s to ensure that scaling happens on a foundation of trust and readiness.

Table of contents

- Step 1: Define goals and use cases

- Step 2: Assess cultural and organizational readiness

- Step 3: Evaluate technical and automation maturity

- Step 4: Check data foundations

- Step 5: Build in guardrails and governance from day one

- Step 6: Pilot and scale responsibly

- Final checklist

- The path forward with agentic AI

Step 1: Define goals and use cases

The first step in getting ready for agentic AI is simple but often overlooked: Be clear about what you’re trying to achieve. Without defined goals, projects drift, pilots stall, and it becomes impossible to weigh risks against benefits.

Agentic AI can unlock different kinds of value depending on the use case. Use the following lenses to evaluate potential use cases:

- Efficiency: Streamline repeatable tasks, reduce manual effort, and free up teams to focus on higher-value work.

- Compliance and risk management: Monitor regulations, enforce policies, and reduce exposure to errors or fraud.

- Innovation: Test new services, accelerate product development, or experiment with new business models.

- Customer experience: Improve response times, personalize interactions, and scale support without adding headcount.

Balancing risk and benefit

Not all use cases are created equal. Drafting email responses with an agent carries very different risks from monitoring regulatory changes or handling sensitive financial data.

One cautionary framework comes from developer Simon Willison, who coined the term “lethal trifecta” for agents that combine three capabilities: access to private data, exposure to untrusted content, and the ability to communicate externally. An agent with all three can be manipulated by instructions hidden in the content it reads into sending sensitive data to an attacker. The danger grows when such an agent also runs with broad autonomy and weak governance. The lesson: Match oversight and governance to the level of risk, and give agents clear guardrails and escalation paths.
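
One way to make "match oversight to risk" concrete is a small lookup that turns a few yes/no risk questions into an oversight tier. The sketch below is illustrative only: the three questions, the tier names, and the `required_oversight` function are assumptions made for this example, not a standard framework.

```python
from enum import Enum


class Oversight(Enum):
    AUTONOMOUS = "agent acts, humans audit samples afterward"
    REVIEW = "agent proposes, a human approves before action"
    BLOCKED = "not suitable for an agent without further safeguards"


def required_oversight(handles_sensitive_data: bool,
                       can_execute_actions: bool,
                       failure_is_recoverable: bool) -> Oversight:
    """Map three hypothetical risk questions to an oversight tier."""
    # All three risk factors together: hold back until safeguards exist.
    if handles_sensitive_data and can_execute_actions and not failure_is_recoverable:
        return Oversight.BLOCKED
    # Any single risk factor: keep a human approval step.
    if handles_sensitive_data or can_execute_actions or not failure_is_recoverable:
        return Oversight.REVIEW
    return Oversight.AUTONOMOUS


# Drafting email replies: no sensitive data, nothing executed, easy to undo.
email_drafts = required_oversight(False, False, True)
# Executing payments: sensitive data, real actions, hard to reverse.
payments = required_oversight(True, True, False)
```

In practice the questions and thresholds would come out of the risk-tolerance conversations described in Step 2, so treat the rules here as placeholders.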

Questions to ask before moving forward

To define and pressure-test a potential use case, start by asking:

- What are the expected outcomes? (faster cycle times, fewer errors, happier customers, lower costs?)

- What does the team expect the agentic AI to do? (draft responses, route tickets, make recommendations, execute transactions?)

- Is the use case experimental or mission-critical? Is failure an acceptable learning experience, or would it carry reputational or compliance consequences?

- What is the potential value and what are the risks? Are you balancing ROI against risk tolerance, and do you have safeguards in place?

The clearer your goals and use cases, the easier it becomes to decide where to start small, where to double down, and where to hold back.

Step 2: Assess cultural and organizational readiness

Even the best technology fails without the right environment around it. Before rolling out agentic AI, it’s critical to ask whether your organization and your people are ready.

Cultural readiness

Are leaders and employees aligned on what generative and agentic AI can realistically do? Do people expect AI to be part of their daily tools, or is there skepticism and resistance? A culture that embraces experimentation, balanced with clear boundaries, is far more likely to see AI projects succeed.

Shadow AI

In many organizations, employees are already experimenting with AI tools on their own. These grassroots “laboratories” can surface creative ideas, but they also create risks if projects involve sensitive data or unsanctioned tools. Visibility, support, and governance turn shadow AI into safe innovation rather than a compliance headache.

Governance and risk tolerance

Leadership must understand the trade-offs. How much risk is acceptable, and in which domains? Explicit conversations about risk tolerance help teams set the right guardrails early.

Human in the loop

Some use cases can run safely with little oversight, while others require continuous human review. For example, an AI agent assisting with internal knowledge search may operate mostly on its own, while a compliance-monitoring agent should always escalate uncertain results to a human reviewer. As the saying goes, “AI without a human in the loop is like a plane flying on autopilot without a pilot: it works until it doesn’t.” Especially in domains with serious legal or regulatory implications, a human checkpoint is non-negotiable.

Readiness is not only technical. It also depends on culture, governance, and trust. If those foundations aren’t in place, even the most advanced agents will stall before they deliver impact.

Step 3: Evaluate technical and automation maturity

Agentic AI is not plug-and-play. Before scaling, it’s essential to know whether the automation stack and the related processes are ready to support autonomous agents. This evaluation requires a holistic discovery approach, mapping not just current workflows, but also the workarounds, scripts, and partial automations teams may already be using. These shadow processes often reveal both the opportunity for automation and the gaps that need to be addressed.

Automation maturity

Where is the organization today? Many teams are still at the RPA stage, automating simple, rules-based tasks. Others have progressed to workflow orchestration, connecting multiple systems. Few have reached the level of orchestrated AI agents that can reason, escalate, and adapt in real time. RPA still has a place in many business activities, and agentic AI can incorporate those RPA steps into a broader process, delivering more complete end-to-end automation.

AI agent requirements

An effective AI agent must:

- Make decisions: Act on defined goals and data inputs.

- Escalate: Flag or pause when confidence is low or when human judgment is required.

- Use memory: Reference past actions or interactions to avoid starting from scratch every time.

- Integrate: Connect seamlessly with systems, data, and tools.

Without these requirements, solutions may succeed in demos but fail in production.
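
As a rough illustration, three of these requirements (deciding, escalating, and using memory) fit in a few lines. Everything here is a stand-in: the `Agent` class, the 0.8 confidence floor, and the keyword-based "model" are assumptions for the sketch, and integration with real systems is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    action: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical model


@dataclass
class Agent:
    confidence_floor: float = 0.8               # assumed threshold; tune per use case
    memory: dict = field(default_factory=dict)  # ticket text -> past Decision

    def decide(self, ticket: str) -> Decision:
        # Use memory: reuse the earlier decision when the same ticket recurs.
        if ticket in self.memory:
            return self.memory[ticket]
        # Stand-in for a model call: only password resets are "well understood".
        confidence = 0.95 if "password reset" in ticket.lower() else 0.4
        return Decision(action="route_to_helpdesk", confidence=confidence)

    def handle(self, ticket: str) -> str:
        decision = self.decide(ticket)
        self.memory[ticket] = decision
        # Escalate: hand off to a person when confidence is below the floor.
        if decision.confidence < self.confidence_floor:
            return "escalated_to_human"
        return decision.action


agent = Agent()
routine = agent.handle("Password reset for jdoe")
unclear = agent.handle("Customer disputes an invoice from last year")
```

A demo usually exercises only the confident path; a production pilot also needs the escalation branch and the memory store to behave well.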

Process maturity

Even the smartest agent fails if the underlying process is undocumented, inconsistent, or unstable. Start with processes that are well-defined, repeatable, and already measured so that you can evaluate AI’s impact with clear before-and-after metrics.

Prototype to validate feasibility

Don’t jump straight into enterprise-wide deployment. Rapid prototyping exposes technical gaps, integration challenges, and process flaws before major investments are made. Platforms like n8n make it easy to prototype agentic workflows in a safe, low-code environment.

The goal is clarity, not perfection. Taking a holistic discovery approach, mapping current maturity, and stress-testing processes through prototypes will reveal whether the organization is ready for agentic AI or if foundational work is needed first.

Step 4: Check data foundations

Agentic AI is only as good as the data it runs on. Reliable automation depends on data that is clean, consistent, and accessible. Many organizations underestimate or skip this step because it feels tedious. The reality: Poor data quality is the leading cause of AI failures, with nearly 70 percent of companies saying bad data limits the value of their AI investments.

Data hygiene and governance

Before deploying agentic AI, confirm that the underlying data is accurate, secure, and compliant. Determine and document where the data came from, who owns it, and how often it’s validated. Weak governance opens the door to bias, drift, or compliance violations.
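
Documenting origin, ownership, and validation cadence can start as a simple record per dataset. The sketch below assumes a hypothetical `DatasetRecord` structure and made-up field values; a real catalog or governance tool would hold the same facts in its own schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DatasetRecord:
    name: str
    source_system: str             # where the data came from
    owner: str                     # who is accountable for it
    last_validated: date           # when it was last checked
    validation_interval_days: int  # how often it should be checked

    def is_stale(self, today: date) -> bool:
        """True when the dataset has gone longer than its interval without validation."""
        return today - self.last_validated > timedelta(days=self.validation_interval_days)


# Illustrative values only.
tickets = DatasetRecord("support_tickets", "crm_export", "support-ops",
                        date(2025, 1, 1), 30)
overdue = tickets.is_stale(date(2025, 3, 1))
```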

Data locations and accessibility

An agent can’t succeed if it can’t reach what it needs. Consider whether your data is spread across silos, trapped in legacy systems, or subject to access restrictions. Connectivity and interoperability are as important as quality.

Risks of bad data

Bias, hallucinations, and non-compliance often stem not from the model itself but from the weak, disorganized data beneath it. A well-known example: Amazon’s AI recruitment system had to be abandoned when it began favoring male candidates, simply because it was trained on biased historical data. Without strong data foundations, agents can replicate and amplify these errors at scale.

Identify critical fields

Focus on the top 10–15 data fields that drive decision-making in your process. Examples:

- Regulatory change monitoring: Regulation name, effective date, impacted business unit, compliance owner.

- Resume-matching automation: Candidate skills, job requirements, years of experience, education.

- Customer support routing: Ticket category, urgency, customer ID, past resolution history.
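
Once the critical fields are named, a quick completeness check shows how trustworthy they are today. This is a minimal sketch using the customer support routing example; the field names, the sample records, and the `field_coverage` helper are all hypothetical.

```python
CRITICAL_FIELDS = ["ticket_category", "urgency", "customer_id"]


def field_coverage(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Return, per field, the share of records with a non-empty value."""
    if not records:
        return {name: 0.0 for name in fields}
    coverage = {}
    for name in fields:
        # Treat missing keys, None, and empty strings as unfilled.
        filled = sum(1 for r in records if r.get(name) not in (None, ""))
        coverage[name] = filled / len(records)
    return coverage


sample = [
    {"ticket_category": "billing", "urgency": "high", "customer_id": "C-1"},
    {"ticket_category": "billing", "urgency": "", "customer_id": "C-2"},
    {"ticket_category": None, "urgency": "low", "customer_id": "C-3"},
    {"ticket_category": "login", "urgency": "low", "customer_id": "C-4"},
]
coverage = field_coverage(sample, CRITICAL_FIELDS)
```

Completeness is only one dimension of quality; accuracy and consistency need their own checks.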

Checking data foundations is not glamorous, but it is indispensable. By clarifying what data will be used, how it’s structured, and whether it can be trusted, you set the stage for agentic AI systems that are fair, compliant, and effective.

Step 5: Build in guardrails and governance from day one

Agentic AI can only be trusted if it operates inside a clear governance framework. Guardrails don’t slow down innovation; they ensure systems behave predictably, securely, and within acceptable risk margins. Governance should be designed into AI projects from the start, not bolted on later.

Governance readiness

Define policies, roles, and oversight mechanisms before launch. Make sure business, IT, and compliance leaders agree on who owns which risks, who monitors outputs, and how escalations will be handled.

Guardrails

Build technical and procedural safeguards, including:

- Escalation paths: Define when and how an AI agent must pass control to a human.

- Kill-switch APIs: Ensure agents will halt immediately if outputs deviate from expected behavior.

- Logging and traceability: Capture detailed activity logs so decisions can be audited.
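
The three safeguards above can be combined in a thin wrapper around an agent. The sketch below is an assumption-heavy illustration: `GuardedAgent`, the refund scenario, and the `max_refund` bound standing in for "expected behavior" are invented for this example.

```python
import threading
from datetime import datetime, timezone


class GuardedAgent:
    """Wraps a hypothetical agent callable with a kill switch and an audit log."""

    def __init__(self, act, max_refund: float):
        self._act = act                    # callable: request -> proposed refund amount
        self._max_refund = max_refund      # assumed bound on expected behavior
        self._halted = threading.Event()   # kill switch; can be set from any thread
        self.audit_log: list[dict] = []

    def halt(self) -> None:
        """Manual kill switch for operators."""
        self._halted.set()

    def run(self, request: str) -> str:
        amount = None
        if self._halted.is_set():
            outcome = "refused_halted"
        else:
            amount = self._act(request)
            if amount > self._max_refund:
                self._halted.set()         # out-of-bounds output trips the switch
                outcome = "escalated_to_human"
            else:
                outcome = "executed"
        # Logging and traceability: record every request, including refusals.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "request": request, "amount": amount, "outcome": outcome,
        })
        return outcome


agent = GuardedAgent(lambda req: 500.0 if "vip" in req else 20.0, max_refund=100.0)
results = [agent.run("refund order 1"), agent.run("refund vip order"),
           agent.run("refund order 2")]
```

Here one out-of-bounds proposal both escalates and halts the agent until a person intervenes; whether a single deviation should stop everything is a policy choice for the governance group.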

Security considerations

Agentic AI introduces new risks that OWASP has mapped in its agentic AI threat model, such as tool misuse, memory poisoning, privilege compromise, and rogue agents. Mitigation strategies include strict permissioning, sandboxing, cryptographic logging, and anomaly detection.
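
One possible reading of cryptographic logging is a hash-chained log, where each entry's hash covers the previous entry so that later edits become detectable. The sketch below uses Python's standard `hashlib` and `json` modules; the entry layout and the function names are assumptions, not something the OWASP material prescribes.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event whose hash covers both the event and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    payload = json.dumps(event, sort_keys=True) + prev_hash
    log.append({"event": event, "prev": prev_hash,
                "hash": hashlib.sha256(payload.encode()).hexdigest()})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        payload = json.dumps(entry["event"], sort_keys=True) + prev_hash
        expected = hashlib.sha256(payload.encode()).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_entry(log, {"agent": "router-1", "tool": "crm.lookup"})
append_entry(log, {"agent": "router-1", "tool": "email.send"})
intact = verify(log)

log[0]["event"]["tool"] = "payments.transfer"  # simulate tampering
tampered_detected = not verify(log)
```

A chain like this only helps if the latest hash is also stored somewhere the agent cannot write to; otherwise an attacker could rebuild the whole chain.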

Penetration testing and simulations

Don’t wait for real-world failures to expose weak points. Simulate breakdowns such as biased outputs, misrouted escalations, or model drift before deployment. Conduct drills that include both technical recovery steps and external communications to regulators, partners, or customers.

Additional governance and guardrail practices

- Data lineage control: Track where the data originated and what actions it went through; reject or quarantine unverified data.

- Bias testing: Run bias checks on both training data and model outputs. If statistically significant impacts are found, flag them for human review before action.

- Model documentation: Clearly document each model’s purpose, scope, limits, expectations, and data sources. Avoid “black box” models that lack human oversight or transparent explanations.

- Encryption: Encrypt sensitive data in storage and in transit. This simple safeguard significantly reduces risk exposure.
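
For the bias-testing practice above, one common way to check whether a gap in outcomes between two groups is statistically significant is a two-proportion z-test. The sketch below is a minimal version using only the standard library; the 0.05 significance level and the sample counts are assumptions, and real bias audits typically combine several metrics.

```python
import math


def two_proportion_z_test(selected_a: int, total_a: int,
                          selected_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in selection rates."""
    rate_a = selected_a / total_a
    rate_b = selected_b / total_b
    pooled = (selected_a + selected_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Everyone or no one was selected in both groups: no measurable gap.
        return 0.0, 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def needs_human_review(p_value: float, alpha: float = 0.05) -> bool:
    """Flag the result for a human reviewer when the gap is significant."""
    return p_value < alpha


# Illustrative counts: 60 of 100 selected in group A, 40 of 100 in group B.
z, p = two_proportion_z_test(60, 100, 40, 100)
flagged = needs_human_review(p)
```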

Step 6: Pilot and scale responsibly

The fastest way to build organizational confidence in agentic AI is through well-chosen pilots. An effective pilot shows value quickly, minimizes downside if it fails, and lays the foundation for scaling. Pilots aren’t the end goal; they’re the bridge between experimentation and enterprise-wide adoption.

Start with the right pilots

Look for workflows that are high-volume and high-value, but where failure won’t cause serious harm. Pilots should be important enough to demonstrate impact, yet safe enough to fail without damaging reputation or operations.

Short sprints, clear outcomes

Pilots should run on fixed timelines, often 12 weeks, so teams can test, learn, and make go/no-go decisions. Time-boxed sprints avoid the trap of “endless pilots” that consume resources without delivering clarity.

Measure the right KPIs

Four metrics create a solid view of pilot performance:

- Adoption: Are users choosing the AI workflow over the manual process?

- Cycle-time delta: How much faster does the work get done?

- Regret spend: How much investment went into failed pilots that weren’t shut down promptly?

- Green-data coverage: Is the pilot supported by clean, reliable data fields?
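
These four metrics can be computed from a handful of counts gathered during the pilot. The formulas below are one plausible interpretation, not definitions taken from a standard: the `PilotStats` fields, and in particular treating regret spend as money spent after a no-go signal was visible, are assumptions for this sketch.

```python
from dataclasses import dataclass


@dataclass
class PilotStats:
    ai_runs: int                   # work items completed through the AI workflow
    manual_runs: int               # work items still done the manual way
    baseline_cycle_minutes: float  # average cycle time before the pilot
    pilot_cycle_minutes: float     # average cycle time during the pilot
    spend_after_no_go: float       # money spent after a no-go signal was visible
    clean_fields: int              # critical fields passing data-quality checks
    critical_fields: int           # critical fields the workflow depends on


def pilot_kpis(s: PilotStats) -> dict[str, float]:
    """Compute the four pilot metrics as simple ratios and totals."""
    saved = s.baseline_cycle_minutes - s.pilot_cycle_minutes
    return {
        "adoption": s.ai_runs / (s.ai_runs + s.manual_runs),
        "cycle_time_delta": saved / s.baseline_cycle_minutes,
        "regret_spend": s.spend_after_no_go,
        "green_data_coverage": s.clean_fields / s.critical_fields,
    }


# Illustrative numbers only.
kpis = pilot_kpis(PilotStats(300, 100, 40.0, 30.0, 0.0, 9, 12))
```

Agreeing on the formulas before the pilot starts matters more than the exact definitions, because it keeps the go/no-go decision from being argued after the fact.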

Scaling playbook

Use a maturity lens to decide how to expand. Pilots may start at the productivity level (drafting, summarizing, triaging), move into automation (end-to-end processes), and eventually progress to transformation (multi-agent systems that validate, escalate, and adapt). Scaling responsibly means advancing only when the previous tier is proven and trusted.

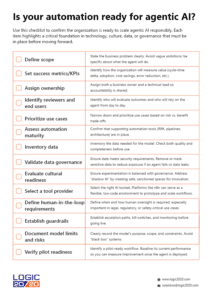

Final checklist

Use this checklist to confirm the organization is ready to scale agentic AI responsibly. Each item highlights a critical foundation in technology, culture, data, or governance that must be in place before moving forward.

The path forward with agentic AI

Agentic AI is a powerful enabler, but success depends on readiness. Start small with a focused prototype, for example one developed in a co-creation workshop, to validate feasibility, test governance, and build trust. Scale only when pilots prove both value and safety, and hold back when data, processes, or guardrails aren’t in place.

Trusted partners can accelerate the journey by designing secure, enterprise-ready programs that balance innovation with governance. As an n8n expert partner, Logic20/20 combines technical depth with business insight to turn agentic AI potential into measurable outcomes.
